News Archive

Siemens awarded the "Inventor of the Year" prize to Hila Safi for her work at LFD on how quantum computing can be used to solve industrial problems, thereby also recognising the LFD's Hardware-Software Co-Design approach. The patents filed in connection with this work drew attention to the topic. The idea involves using a digital twin to simulate potential errors and to determine the optimal location for quantum computers outside of laboratory environments.

Congrats, Hila!

Find further information at Siemens, LinkedIn, or Konstruktionspraxis.

We are happy to welcome three new colleagues to the laboratory!

Vincent Gierisch, Dominik Köster, and Nils Rabeneck have joined the team as doctoral students in the fields of quantum computing and software engineering, contributing to the AIM-SMEs project.

Welcome, all three of you!

Oliver von Sicard has joined the team as Senior Researcher in the field of software engineering and artificial intelligence, contributing to the AIM-SMEs project.

Welcome, Oliver!

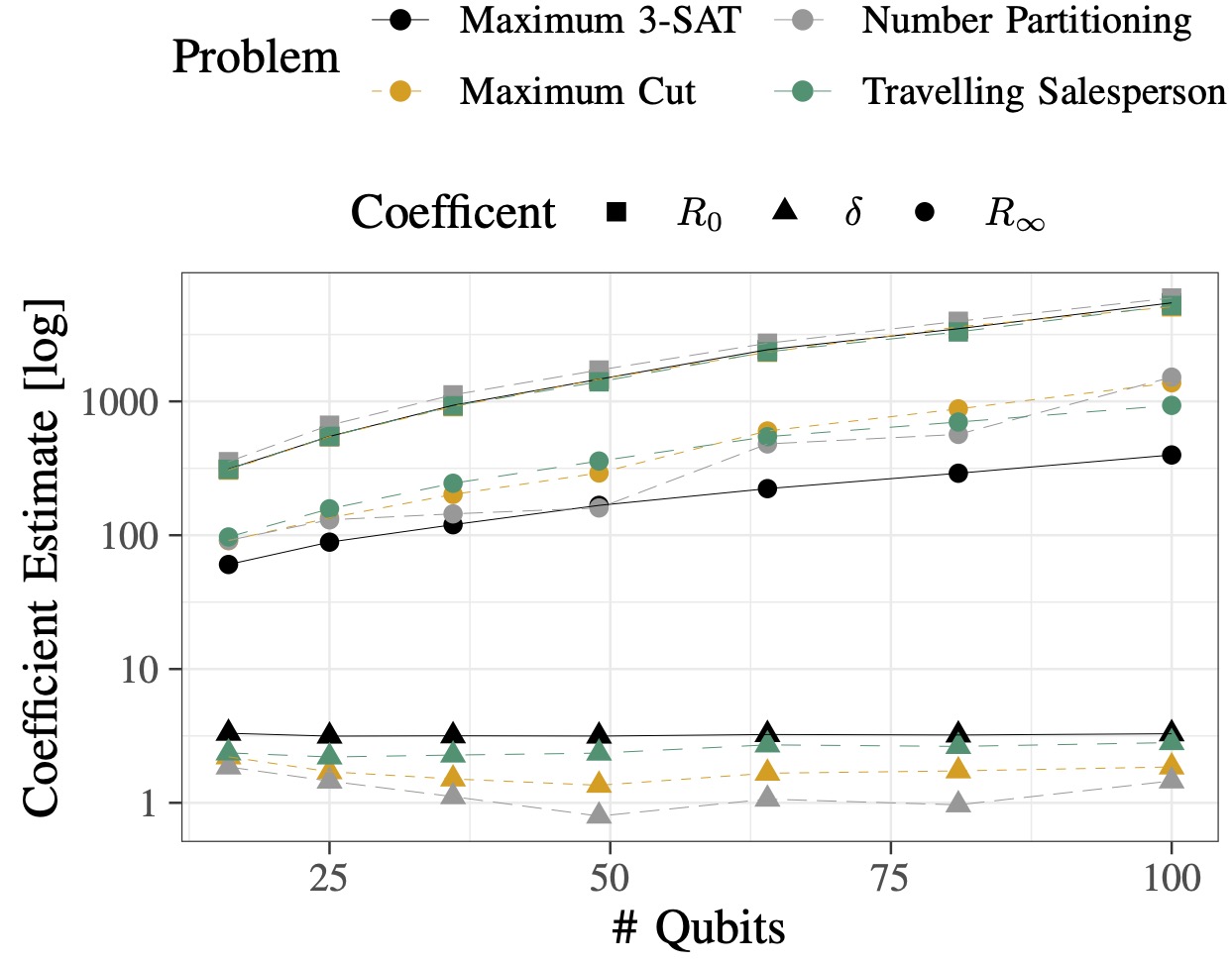

New paper published in the Q1 journal Quantum

We are happy to announce the publication of our latest paper on quantum algorithms by Tom Krüger and Wolfgang Mauerer.

Popular Summary

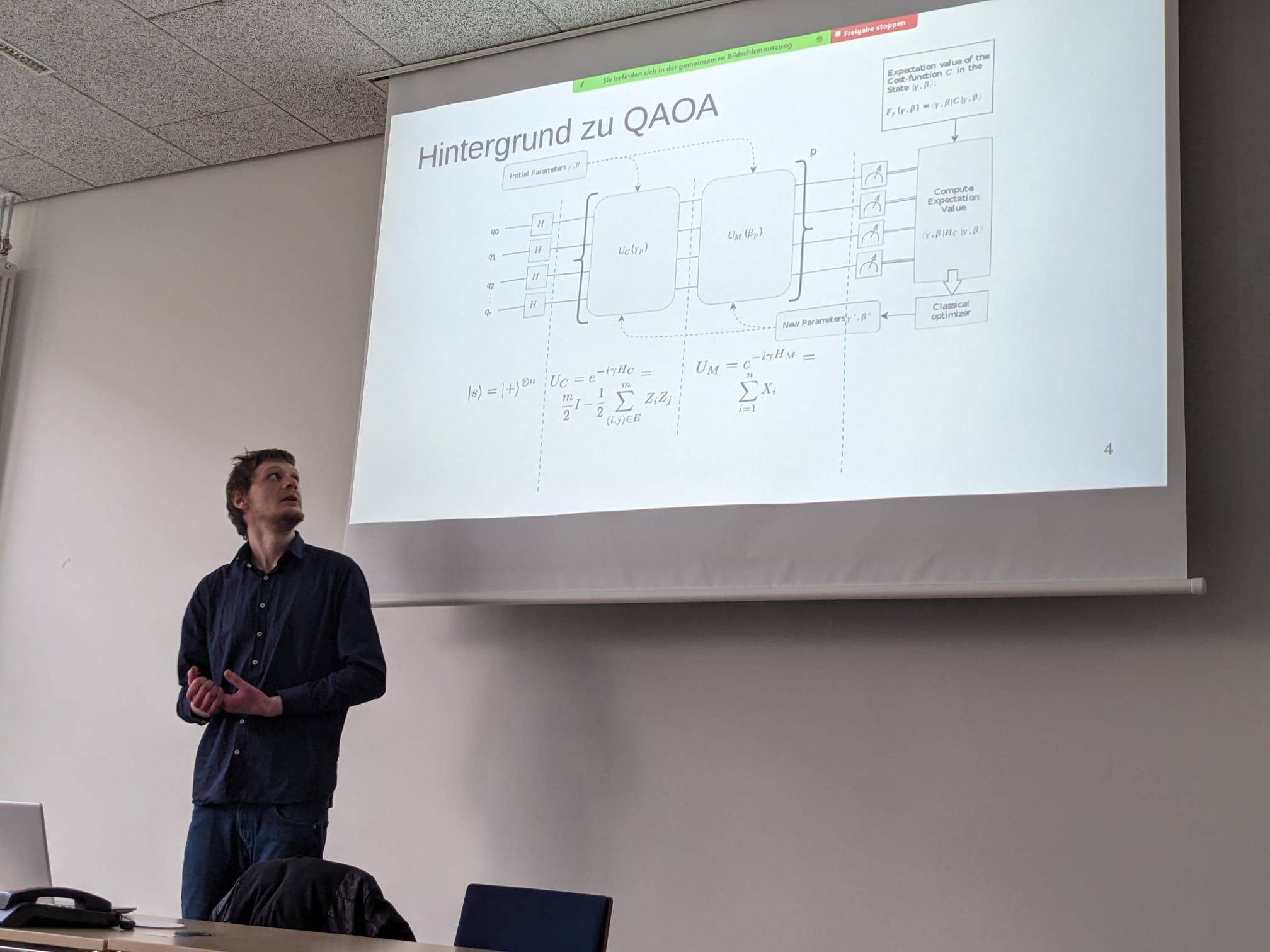

Variational quantum algorithms (VQAs) have become extremely popular in the current era of quantum computing. In these algorithms, a parameterised circuit ansatz is optimised classically for each instance to be solved. To harness the greatest possible quantum advantage, the cost of this classical parameter optimisation has to be reduced as much as possible. Building on a sound theoretical underpinning that proves a longstanding empirical conjecture, we derive a new non-iterative variant of the unit-depth version of the most common VQA, the Quantum Approximate Optimisation Algorithm (QAOA), which works without instance-specific parameter optimisation.

In this work, we provide a sound theoretical explanation and proof of the longstanding empirical conjecture that problem-wide structures exist which cause instance-optimal QAOA parameters to cluster. More specifically, we derive an error-bounded structural approximation theorem. It shows how the expected unit-depth QAOA parameter optimisation landscape can be precisely approximated by structural information about the problem solution spaces. For problems with constant-sized solution spaces, our approximation is even exact. We further provide an extensive set of examples, each building on the previous ones, that introduce possible applications of our approximation method. Finally, we show that parameter optimisation on this approximate landscape yields a set of problem-wide, close-to-optimal parameters, resulting in a non-iterative version of QAOA that matches standard QAOA.

We thus make two main contributions to build upon. First, the landscape approximation theorem, which opens up QAOA research to the vast body of work in theoretical computer science on the structure of solution spaces. Second, a strong theoretical underpinning for non-iterative QAOA methods.
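
Here is a minimal brute-force sketch in Python that makes the notion of a unit-depth QAOA parameter landscape concrete. It does not implement the structural approximation theorem from the paper. Instead, it computes the exact depth-1 landscape that the theorem approximates, using direct statevector simulation of MaxCut on tiny graphs. The problem choice (MaxCut), the angle ranges and the grid resolution are our own illustrative assumptions.

```python
import cmath
import math


def cut_value(z, edges):
    """Number of edges cut by basis state z (bit q of z encodes qubit q)."""
    return sum(1 for u, v in edges if ((z >> u) & 1) != ((z >> v) & 1))


def qaoa_p1_expectation(n, edges, gamma, beta):
    """Exact <C> of depth-1 QAOA for MaxCut via brute-force statevector simulation."""
    dim = 2 ** n
    costs = [cut_value(z, edges) for z in range(dim)]
    # Start in |+>^n and apply the phase separator exp(-i*gamma*C), diagonal in this basis.
    amp = [cmath.exp(-1j * gamma * c) / math.sqrt(dim) for c in costs]
    # Mixer exp(-i*beta*X) on every qubit: [[cos b, -i sin b], [-i sin b, cos b]].
    cb, sb = math.cos(beta), math.sin(beta)
    for q in range(n):
        mask = 1 << q
        for z in range(dim):
            if z & mask == 0:  # visit each (bit=0, bit=1) amplitude pair once
                a0, a1 = amp[z], amp[z | mask]
                amp[z] = cb * a0 - 1j * sb * a1
                amp[z | mask] = -1j * sb * a0 + cb * a1
    return sum(abs(a) ** 2 * c for a, c in zip(amp, costs))


def grid_optimum(n, edges, steps=40):
    """Scan gamma in [0, pi) and beta in [0, pi/2); return (best <C>, gamma, beta)."""
    best = (-math.inf, 0.0, 0.0)
    for i in range(steps):
        for j in range(steps):
            gamma, beta = math.pi * i / steps, (math.pi / 2) * j / steps
            value = qaoa_p1_expectation(n, edges, gamma, beta)
            if value > best[0]:
                best = (value, gamma, beta)
    return best


triangle = [(0, 1), (1, 2), (0, 2)]
print(grid_optimum(3, triangle, steps=20))
```

For a single edge, the scan recovers the depth-1 optimum of an expected cut of 1 at gamma = pi/2, beta = pi/8. Running `grid_optimum` on several graphs from one problem family is a simple way to see whether their optimal angles cluster.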

New contribution to the CORE A* VLDB conference

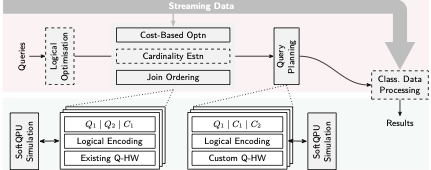

We are delighted to share that our latest paper on data management, driven by Manuel Schönberger, Immanuel Trummer, associate professor of computer science at Cornell University, and Wolfgang Mauerer, has been accepted at VLDB 2026.

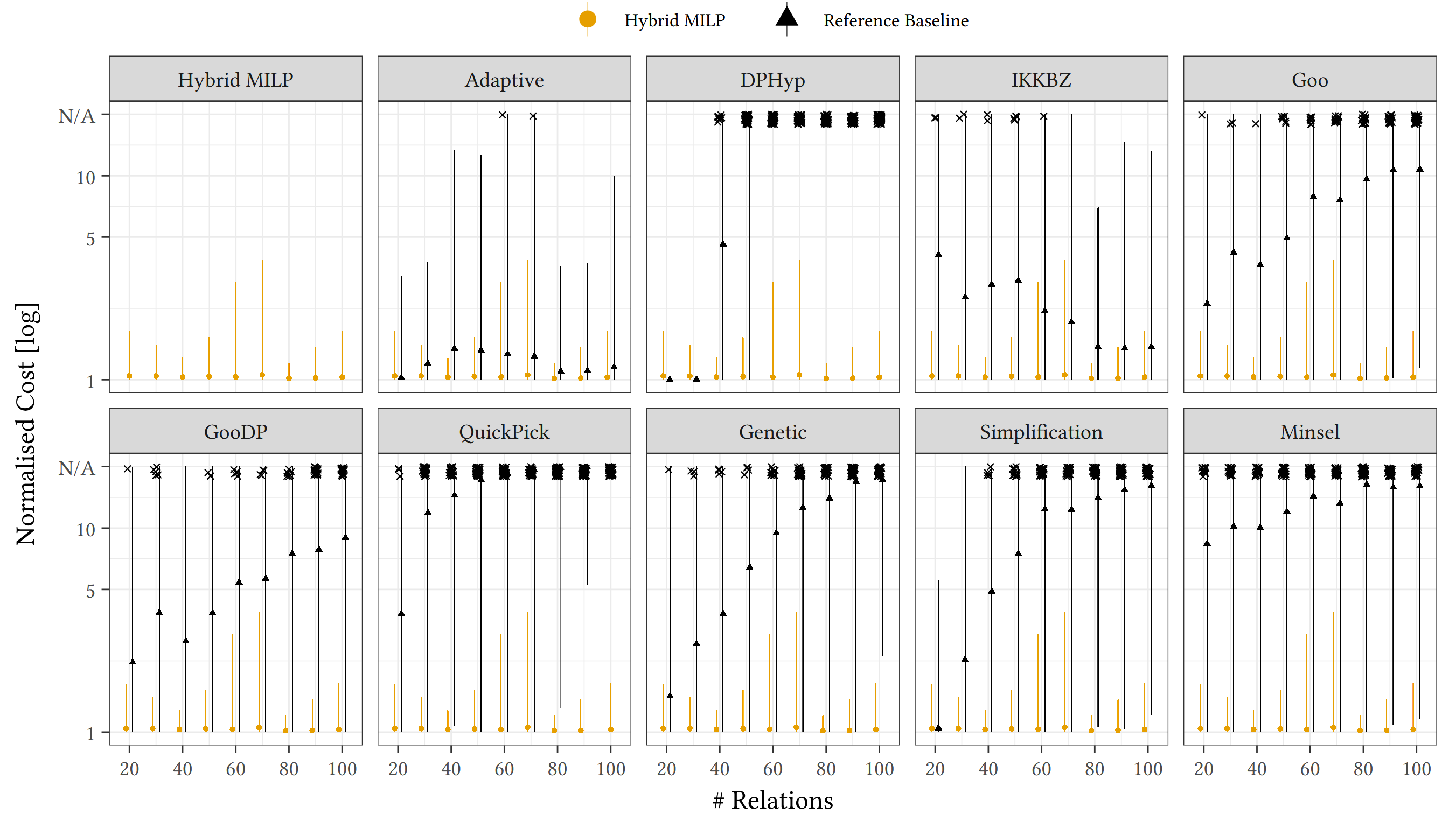

Our paper proposes a novel hybrid join ordering algorithm that combines mixed integer linear programming (MILP) solvers with efficient complementary methods. In this way, our approach achieves remarkable robustness and improves upon a large variety of competitors for large-scale query loads joining up to 100 relations.

Abstract

Finding optimal join orders is among the most crucial steps to be performed by query optimisers. Though extensively studied in data management research, the problem remains far from solved: While query optimisers rely on exhaustive search methods to determine ideal solutions for small problems, such methods reach their limits once queries grow in size. Yet, large queries become increasingly common in real-world scenarios, and require suitable methods to generate efficient execution plans. While a variety of heuristics have been proposed for large-scale query optimisation, they suffer from degrading solution quality as queries grow in size, or feature highly sub-optimal worst-case behavior, as we will show.

We propose a novel method based on the paradigm of mixed integer linear programming (MILP): By deriving a novel MILP model capable of optimising arbitrary bushy tree structures, we address the limitations of existing MILP methods for join ordering, and can rely on highly optimised MILP solvers to derive efficient tree structures that elude competing methods. To ensure optimisation efficiency, we embed our MILP method into a hybrid framework, which applies MILP solvers precisely where they provide the greatest advantage over competitors, while relying on more efficient methods for less complex optimisation steps. Thereby, our approach gracefully scales to extremely large query sizes joining up to 100 relations, and consistently achieves the most robust plan quality among a large variety of competing join ordering methods.
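
The exhaustive baseline mentioned in the abstract can be illustrated with a textbook dynamic program over relation subsets. It enumerates bushy trees under the simple C_out cost, which sums the sizes of intermediate results. This is a sketch that assumes independent predicate selectivities. It is not the paper's MILP model or hybrid framework, and the relation names, cardinalities and selectivities are hypothetical. Because every subset is split in every possible way, the work grows roughly as 3^n in the number of relations. That growth is why such methods break down long before 100 relations.

```python
from itertools import combinations


def result_cardinality(subset, cards, sel):
    """Estimated join size: product of base cardinalities times pairwise selectivities."""
    rels = sorted(subset)
    card = 1.0
    for r in rels:
        card *= cards[r]
    for a, b in combinations(rels, 2):
        card *= sel.get(frozenset((a, b)), 1.0)  # no predicate means cross product
    return card


def best_bushy_plan(cards, sel):
    """Exhaustive DP over all relation subsets (bushy trees, C_out cost)."""
    rels = sorted(cards)
    best = {frozenset([r]): (0.0, r) for r in rels}  # base relations cost nothing
    for size in range(2, len(rels) + 1):
        for combo in combinations(rels, size):
            subset = frozenset(combo)
            out = result_cardinality(subset, cards, sel)
            anchor = combo[0]  # keep it on the left to skip mirrored splits
            candidate = None
            for k in range(1, size):
                for left in combinations(combo, k):
                    if anchor not in left:
                        continue
                    lhs = frozenset(left)
                    rhs = subset - lhs
                    cost = out + best[lhs][0] + best[rhs][0]
                    if candidate is None or cost < candidate[0]:
                        candidate = (cost, (best[lhs][1], best[rhs][1]))
            best[subset] = candidate
    return best[frozenset(rels)]


cards = {"A": 10, "B": 100, "C": 1000}
sel = {frozenset(("A", "B")): 0.1, frozenset(("B", "C")): 0.01}
print(best_bushy_plan(cards, sel))
```

In this toy instance, joining A and B first keeps the intermediate result small, so the plan ((A, B), C) wins.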

New funded project: Accelerating AI-based Innovations in SMEs (AIM-SMEs)

In our new project (with a funding volume of more than 2.1 million EUR), the lab, with Nicole Höß as coordinator, will closely collaborate with small and medium-sized enterprises to enable concrete use cases for machine learning and artificial intelligence. Many inside and outside the computer science domain, including the EU with its STEP initiative, see these topics as crucial for the future.

We are looking forward to working with our many collaboration partners on exciting and innovative endeavours!

At this year's dtec.bw Annual Conference at Helmut-Schmidt-University Hamburg, the LFDR was represented together with UniBw München to showcase the project MuQuaNet.

One of the highlights at our booth: the QKD charging station, which enables even conventional phones to use quantum-secure communication. Based on the BBM92 protocol, the demonstration showed how research can be translated into practical applications for future-proof cybersecurity.
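
The following sketch shows the core idea of the entanglement-based BBM92 protocol: random basis choices followed by basis sifting. It is a classical Monte Carlo simulation of the measurement statistics of ideal |Phi+> pairs, not the demonstrator's implementation. The `flip_prob` noise parameter is a hypothetical stand-in for channel and detector errors. A real deployment would also sacrifice part of the sifted key to estimate the quantum bit error rate (QBER), followed by error correction and privacy amplification.

```python
import random


def bbm92_sift(n_pairs, flip_prob=0.0, seed=0):
    """Simulate measurement statistics of ideal |Phi+> pairs plus basis sifting."""
    rng = random.Random(seed)
    alice_key, bob_key = [], []
    for _ in range(n_pairs):
        basis_a, basis_b = rng.choice("ZX"), rng.choice("ZX")
        a = rng.randint(0, 1)  # Alice's outcome is uniformly random in either basis
        if basis_a != basis_b:
            continue  # dropped after public basis comparison (outcomes uncorrelated)
        b = a  # |Phi+> is perfectly correlated in both the Z and the X basis
        if rng.random() < flip_prob:
            b ^= 1  # hypothetical channel/detector noise
        alice_key.append(a)
        bob_key.append(b)
    return alice_key, bob_key


def qber(alice_key, bob_key):
    """Quantum bit error rate of the sifted key."""
    return sum(x != y for x, y in zip(alice_key, bob_key)) / len(alice_key)


a, b = bbm92_sift(10000, flip_prob=0.05, seed=1)
print(len(a), qber(a, b))
```

Roughly half of the pairs survive sifting, and the error rate of the sifted key reflects the injected noise.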

With over 50 projects on display, the conference once again underlined the role of dtec.bw as a driver for innovation and technology transfer.

Funded projects within the DFG Priority Programme 2514

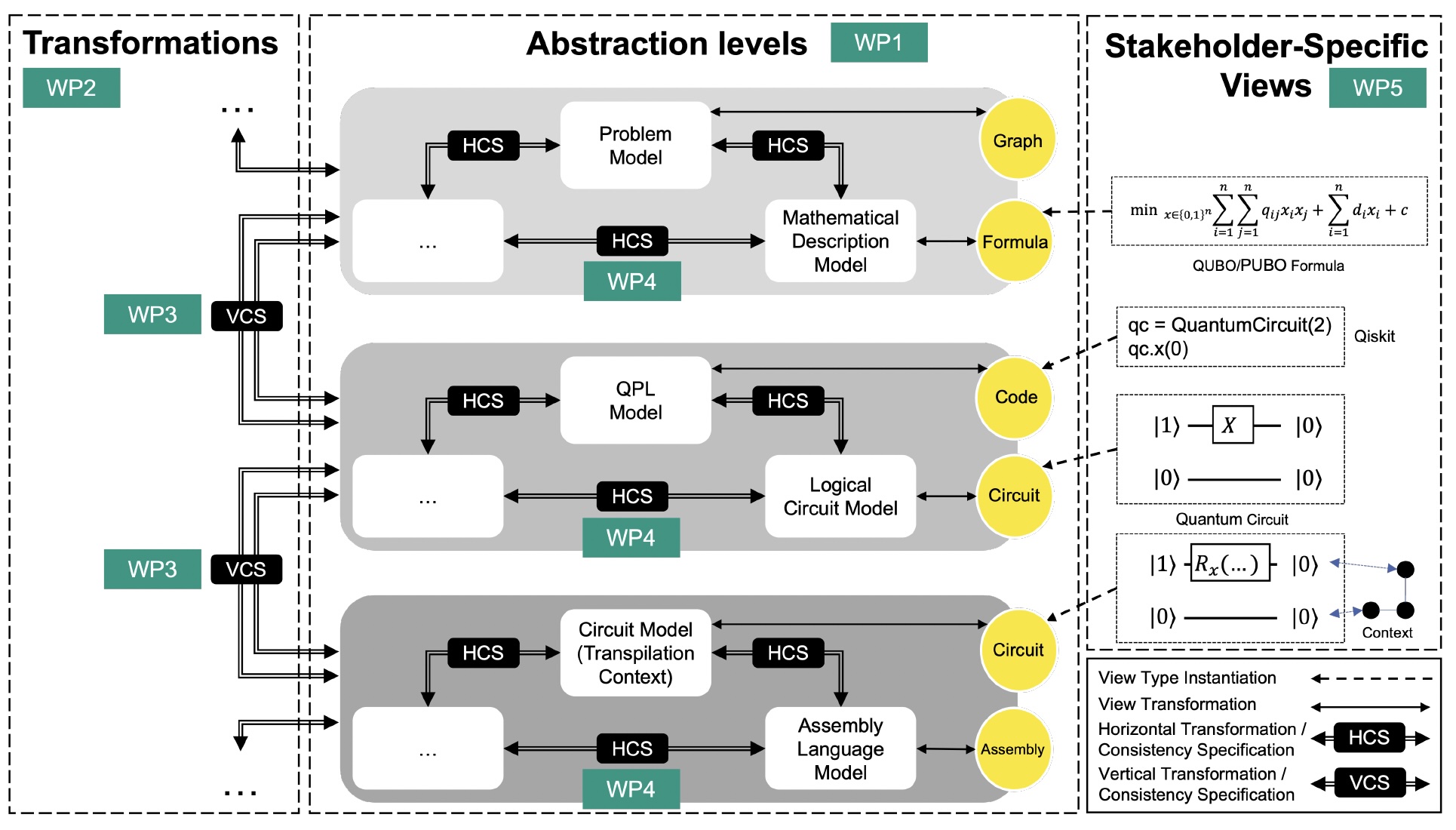

The priority programme on quantum algorithms, software and systems of the German Research Foundation (DFG, SPP 2514) has finished selecting participating research projects. We are happy that our proposal MoQel: Seamless Development of Quantum Software with Stakeholder-Specific Views has been selected by the reviewers! We will carry out the work with our valued colleagues from Prof. Schaefer's group at KIT. This continues an existing collaboration that addresses how appropriate and efficient abstractions can ease the programming of discrete and analogue quantum systems.

Additionally, we will contribute to infrastructural work on quantum reproducibility. This work aims to establish high standards of reuse among researchers in a complex and new domain where not just ideas, but even the underlying hardware, still change frequently.

LfD successfully contributes to many aspects of QCE'25

The laboratory is thrilled to have successfully placed nine contributions at IEEE QCE in Albuquerque, USA, the largest event for everything related to the scientific and engineering aspects of quantum computing!

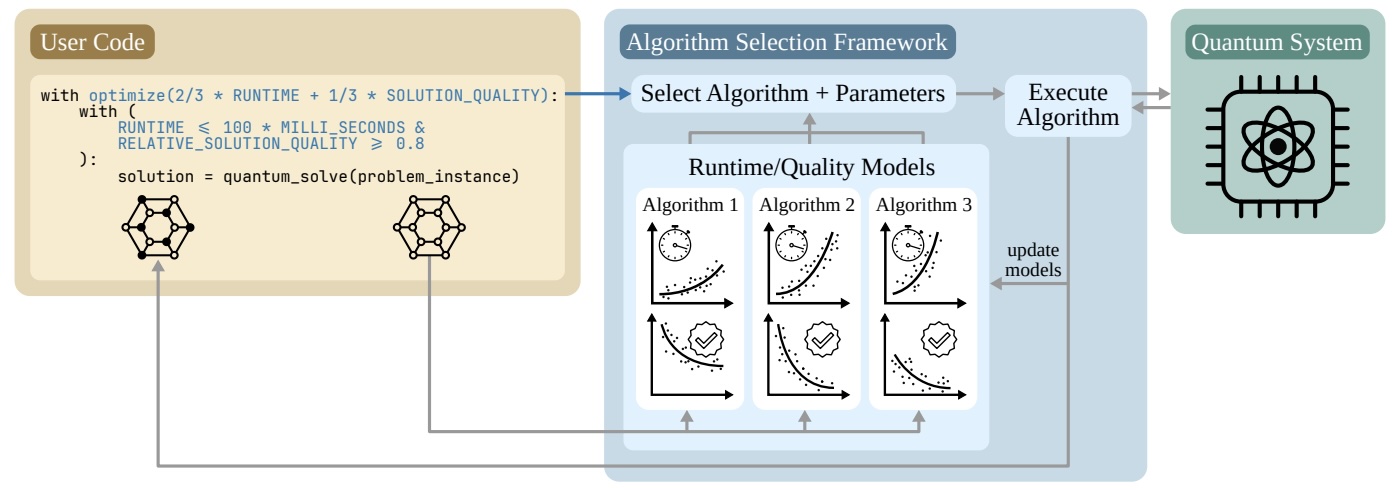

- Predict and Conquer: Navigating Algorithm Trade-offs with Quantum Design Automation by Simon Thelen in the QSYS main track

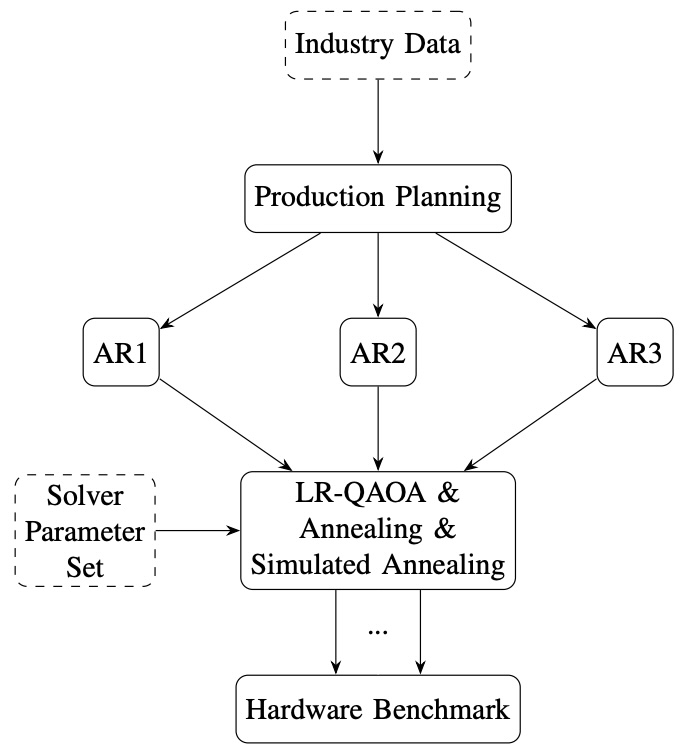

- Path Matters: Industrial Data Meet Quantum Optimization by Lukas Schmidbauer and colleagues from BMW and OptWare in the QAPP main track

- CutReg: A loss regularizer for enhancing the scalability of QML via adaptive circuit cutting by Maniraman Periyasamy and colleagues from Fraunhofer IIS at the 3rd QML@QCE

- TWiDDle: Twirling and Dynamical Decoupling, and Crosstalk Noise Modeling by Hila Safi and Christoph Niedermeier from Siemens Foundational Technology at the 5th QSET@QCE

- Towards System-Level Quantum-Accelerator Integration by Ralf Ramsauer at the 5th WIHPQC@QCE

Congrats to everyone involved in this comprehensive effort, and many thanks to our cooperation partners that we enjoyed working with to realise this success!

Shonan Meeting of the NII brings quantum software researchers together

The NII Shonan Meeting, hosted by Japan's National Institute of Informatics, is an invitation-only event; this edition took place near Tokyo and was followed by the QSE meeting in Tokyo itself. Fundamental questions about the actual roots of quantum advantage remain unsolved. Designing software mechanisms that will let researchers and programmers exploit such advantage in the future, without deep knowledge of quantum internals, is therefore a challenging problem. The international group of leading researchers in the field discussed it at length.

Participants examined how classical engineering principles apply, or need to be rethought, in the context of quantum technologies. They also enjoyed insights from industry on the latest progress in software aspects of low-level control of quantum computers, and on new approaches to the quantum-classical compute continuum.

First native PhD degree at the laboratory awarded to Manuel Schönberger!

Manuel Schönberger successfully defended his PhD thesis before an internationally acclaimed committee comprising Prof. Dr. Immanuel Trummer from Cornell University, Prof. Dr. Kurt Stockinger from the University of Zürich and Zürcher Hochschule, Prof. Dr. Jürgen Frikel from OTH as head of the committee, and Wolfgang Mauerer as Manuel's supervisor and first examiner.

Manuel's excellent dissertation produced three CORE A* papers, an achievement likely matched by few dissertation projects, and led to the first native PhD ever awarded at OTH Regensburg. It has arguably not only broken new ground, but also set high standards for any upcoming efforts. Congrats, Manuel!

PhD student Lukas Schmidbauer has received the Best Student Paper Award at the IEEE International Conference on Quantum Software (QSW) 2025 in Helsinki!

QSW is part of the IEEE Services Conference, which takes place annually. With an acceptance rate of 22.86% in 2025, it maintains high quality standards. The conference offered deep insights into upcoming developments and challenges in quantum software.

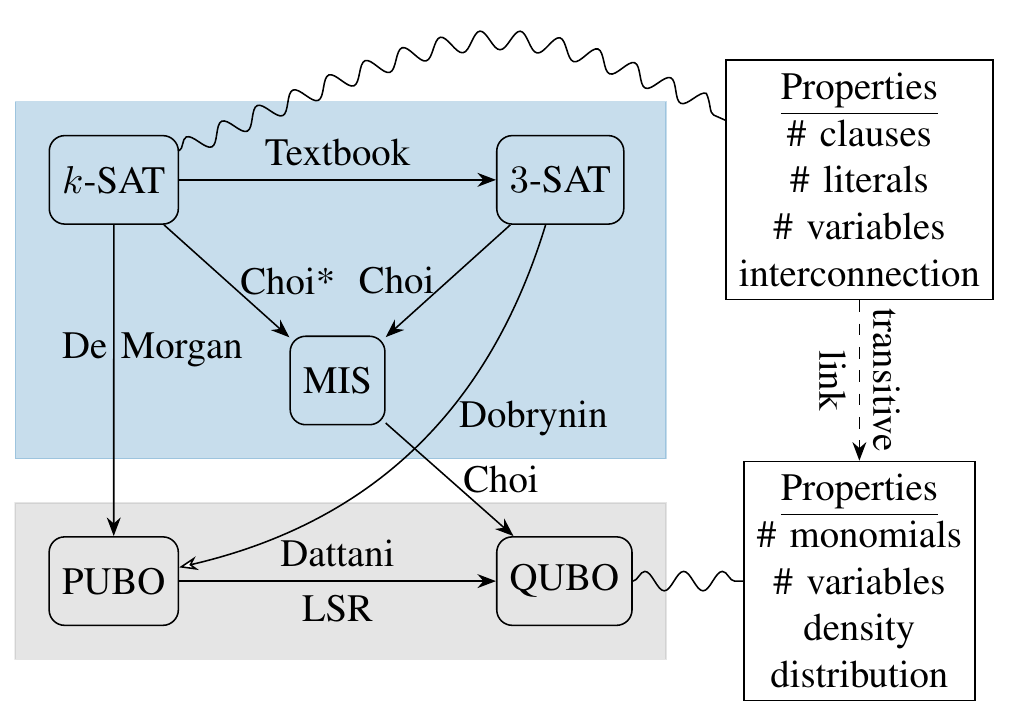

In his work, Lukas Schmidbauer analyses the applicability of quantum software to industry problems and makes important contributions to automatic quantum toolchains that ultimately map high-level descriptions of problems to quantum hardware. Together with Wolfgang Mauerer, he demonstrates that the plethora of mapping approaches can be systematically broken down into (multiple) transformation steps. Furthermore, he shows that specific paths of transformations develop important properties in a predictable manner, which is an important step towards automated methods in quantum toolchains.
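
Here is a toy illustration of such a transformation path. It is our own example, not the toolchain from the paper. The sketch takes a SAT clause, turns it into a cubic pseudo-Boolean penalty, and then reduces it to a quadratic, QUBO-compatible form. The reduction uses Rosenberg's substitution, which replaces a product x_a x_b by an auxiliary binary variable y. Polynomials are represented as dictionaries that map variable sets to coefficients. The auxiliary names `y0`, `y1`, ... and the rule for choosing the penalty weight are assumptions of this sketch.

```python
from itertools import product


def poly_mul(p, q):
    """Multiply multilinear polynomials {frozenset(vars): coeff}; x*x = x for binary x."""
    out = {}
    for t1, c1 in p.items():
        for t2, c2 in q.items():
            t = t1 | t2
            out[t] = out.get(t, 0) + c1 * c2
    return {t: c for t, c in out.items() if c != 0}


def clause_penalty(variables):
    """Penalty for (x1 or x2 or ...): equals 1 iff all are false, i.e. prod(1 - x_i)."""
    p = {frozenset(): 1}
    for v in variables:
        p = poly_mul(p, {frozenset(): 1, frozenset([v]): -1})
    return p


def evaluate(p, assignment):
    return sum(c for t, c in p.items() if all(assignment[v] for v in t))


def rosenberg_quadratize(p):
    """Replace x_a*x_b in higher-order terms by an auxiliary y until degree <= 2."""
    p, aux = dict(p), 0
    while True:
        high = [t for t in p if len(t) > 2]
        if not high:
            return p
        a, b = sorted(high[0])[:2]
        y = f"y{aux}"
        aux += 1
        new, moved = {}, 0
        for t, c in p.items():
            if len(t) > 2 and a in t and b in t:
                t = (t - {a, b}) | {y}
                moved += abs(c)
            new[t] = new.get(t, 0) + c
        m = moved + 1  # a wrong y can gain at most `moved`, so it never pays off
        # m * (ab - 2ay - 2by + 3y) is 0 iff y == a*b and at least m otherwise
        for t, c in {frozenset([a, b]): m, frozenset([a, y]): -2 * m,
                     frozenset([b, y]): -2 * m, frozenset([y]): 3 * m}.items():
            new[t] = new.get(t, 0) + c
        p = {t: c for t, c in new.items() if c != 0}


def min_over_aux(quad, assignment, aux_vars):
    """Minimise the quadratic form over auxiliary variables for fixed original ones."""
    return min(evaluate(quad, {**assignment, **dict(zip(aux_vars, ys))})
               for ys in product((0, 1), repeat=len(aux_vars)))
```

Each step preserves the minimum over the auxiliary variables, so the quadratic form has the same satisfying assignments as the clause. The price is extra qubits and larger coefficients. That trade-off is exactly the kind of property that differs between transformation paths.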

As head of the quantum working group of Münchner Kreis, Wolfgang Mauerer helped organise a meetup on recent developments in quantum computing at IBM Munich. It was a pleasure to enjoy insights from distinguished and internationally recognised speakers, including Markus Rummler, David Faller, Dr. Heike Riel, Utz Bacher, Dr. Elmar Mittereiter, and Robert Lahmann. Participants also enjoyed a tour through IBM's innovation lab, which featured quantum and non-quantum exhibits.

New contribution to the CORE A* SIGMOD conference

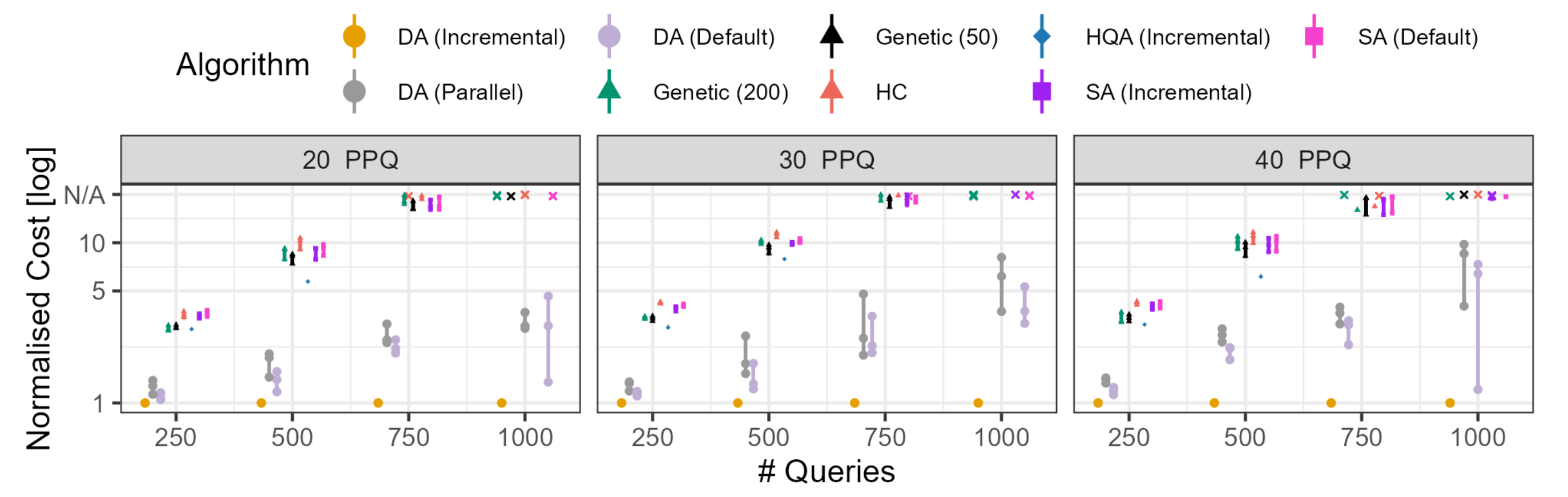

We are delighted to share that our latest paper on quantum data management, driven by Manuel Schönberger, Immanuel Trummer, associate professor of computer science at Cornell University, and Wolfgang Mauerer, has been accepted at ACM SIGMOD 2026. Our work uncovers the potential of quantum(-inspired) annealing for large-scale multiple query optimisation, using problem partitioning and incremental optimisation techniques for efficient search space exploration.

Abstract

Multiple-query optimization (MQO) seeks to reduce redundant work across query batches. While MQO offers opportunities for dramatic performance improvements, the problem is NP-hard, limiting the sizes of problems that can be solved on generic hardware. We propose to leverage specialized hardware solvers for optimization, such as Fujitsu’s Digital Annealer (DA), to scale up MQO to problem sizes formerly out of reach.

We present a novel incremental processing approach that combines classical computation with DA acceleration. By efficiently partitioning MQO problems into sets of partial problems, and by applying a dynamic search steering strategy that reapplies initially discarded information to incrementally process individual problems, our method overcomes capacity limitations, and scales to extremely large MQO instances (up to 1,000 queries). A thorough and comprehensive empirical evaluation finds our method substantially outperforms existing approaches. Our generalisable framework lays the ground for other database use-cases on quantum-inspired hardware, and bridges towards future quantum accelerators.
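
The following sketch shows a common QUBO pattern for MQO, for readers unfamiliar with how the problem reaches an annealer. It uses one binary variable per (query, plan) pair and a one-hot penalty per query. Plan costs become linear terms, and savings from shared work become negative quadratic terms. This is not the paper's incremental partitioning method. The exhaustive search only stands in for the Digital Annealer on a toy instance, and all costs, savings and the penalty weight are hypothetical.

```python
from itertools import product


def build_mqo_qubo(plan_costs, savings, penalty):
    """QUBO choosing exactly one plan per query; shared work lowers the energy."""
    variables = [(q, p) for q, plans in enumerate(plan_costs) for p in range(len(plans))]
    index = {v: i for i, v in enumerate(variables)}
    Q, offset = {}, 0.0

    def add(i, j, w):
        key = (min(i, j), max(i, j))
        Q[key] = Q.get(key, 0.0) + w

    for (q, p), i in index.items():
        # plan cost plus the linear part of penalty * (1 - sum_p x_qp)^2
        add(i, i, plan_costs[q][p] - penalty)
    for q, plans in enumerate(plan_costs):
        offset += penalty  # constant part of the one-hot penalty
        for p1 in range(len(plans)):
            for p2 in range(p1 + 1, len(plans)):
                add(index[(q, p1)], index[(q, p2)], 2 * penalty)  # quadratic part
    for (a, b), saving in savings.items():
        add(index[a], index[b], -saving)  # reward plan pairs that share work
    return variables, Q, offset


def qubo_energy(Q, offset, x):
    return offset + sum(w * x[i] * x[j] for (i, j), w in Q.items())


def brute_force(variables, Q, offset):
    """Exhaustive minimisation; a stand-in for the annealer on tiny instances."""
    best = min(product((0, 1), repeat=len(variables)),
               key=lambda x: qubo_energy(Q, offset, x))
    chosen = {q: p for (q, p), bit in zip(variables, best) if bit}
    return qubo_energy(Q, offset, best), chosen


plan_costs = [[5, 6], [4, 7]]      # two queries with two candidate plans each
savings = {((0, 1), (1, 1)): 8}    # plans (0,1) and (1,1) share an expensive subplan
print(brute_force(*build_mqo_qubo(plan_costs, savings, penalty=20)))
```

Individually more expensive plans win here because their shared work outweighs the difference. Exhaustive search of this kind is infeasible for large batches, and so is fitting a whole instance onto limited annealer capacity. Both limits motivate partitioning.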

We are happy that our two full paper submissions to IEEE QSW (with a challenging 22% acceptance rate) have been accepted:

- Lukas Schmidbauer, Wolfgang Mauerer: SAT Strikes Back: Parameter and Path Relations in Quantum Toolchains

- Stefan Maschek, Jürgen Schwitalla, Maja Franz and Wolfgang Mauerer: Make Some Noise! Measuring Noise Model Quality in Real-World Quantum Software

As always, it was a joy to work with our collaboration partners at Eviden and the Karlsruhe Institute of Technology.

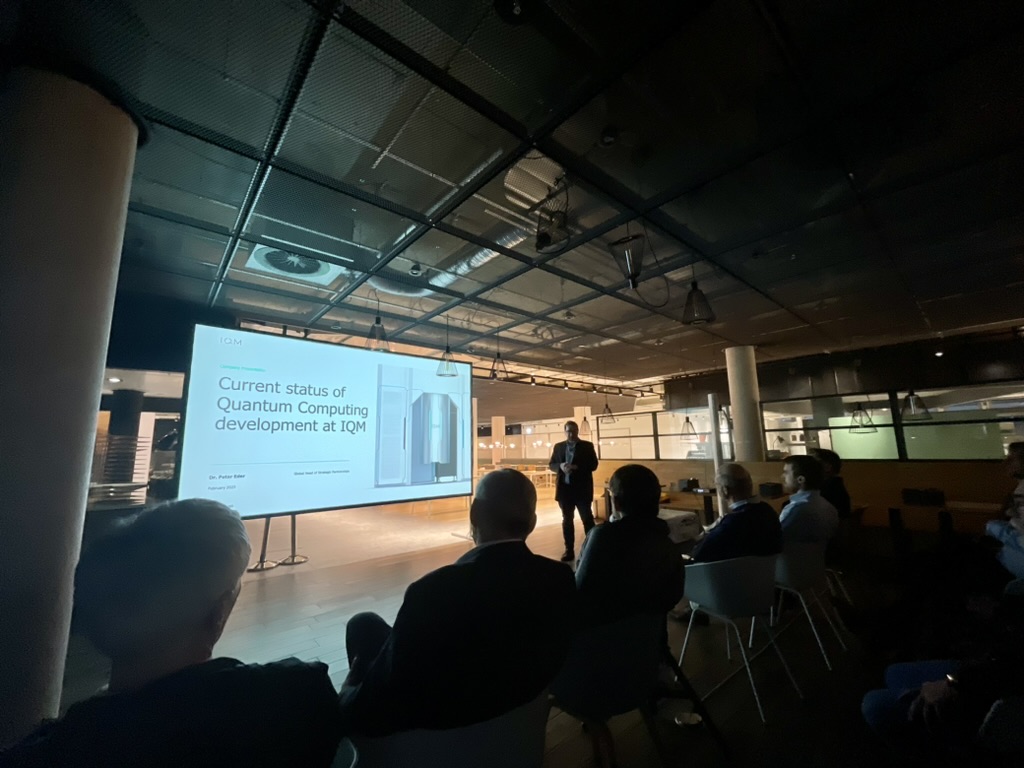

As head of the quantum working group of Münchner Kreis, Wolfgang Mauerer organised a meetup on recent developments in quantum computing at IQM Munich. It was a pleasure to enjoy technical details and the Finnish-German hospitality of one of the most advanced manufacturers of quantum computers. Participants were particularly impressed by the tour through IQM's newly opened quantum data centre.

Research Master student Benno Bielmeier successfully finished his thesis and continues his work in the team as a doctoral student in the Systems Architecture Research Group. Congrats, Benno! Congratulations also go to the two graduates Felix Wagner and Lukas Landgraf, who successfully presented their Bachelor's theses.

Two more companies from the Upper Palatinate (Oberpfalz) have successfully completed the KI-Transfer Plus funding programme.

At the closing event in January 2025 at the House of Communication in Munich, OmniCert Umweltgutachter GmbH from Bad Abbach and LÄPPLE Automotive GmbH from Teublitz presented their AI transformation.

LÄPPLE Automotive GmbH aims for an AI-supported system that efficiently manages and checks customer requirements from various portals. Instead of manual downloads and checks, the AI automatically identifies released revisions, checks standards, and ensures that the latest validated versions are always used. Intelligent text analysis combined with machine learning analyses documents, identifies relevant content, and precisely matches it against existing in-house standards.

Since 2024, OmniCert has been expanding its use of AI to make the preparation of expert reports more efficient. Process steps such as the automatic classification, filing, and content extraction of documents are automated. These AI-supported tools assist experts and auditors in making certification decisions. A particular challenge lies in the diversity of incoming data, which ranges from different file formats to handwritten documents. By the end of the project, the AI is able to recognise basic document types.

The approaches started in the project will be continued in a joint research project with the RCAI to gradually extend the capabilities of the solution.

Wolfgang Mauerer was invited to participate in the renowned Dagstuhl Seminar on Quantum Software Engineering, a key event bringing together leading researchers to shape the future of this emerging discipline.

The seminar focused on the convergence of quantum computing and software engineering, addressing critical topics such as quantum software design, modeling, architecture, quality assurance, and the development of adaptive hybrid quantum systems. Participants examined how classical engineering principles apply—or need to be rethought—in the context of quantum technologies. The event also served as a platform for fostering community among researchers and for outlining a clear research roadmap.

All outcomes and discussions from the seminar are detailed in a comprehensive final report, contributing to the foundation and growth of Quantum Software Engineering as a discipline.

Wolfgang Mauerer, Chairing Director of the RCAI, organised and moderated a roundtable in Berlin together with the Federal Ministry for Digital and Transport. Leading representatives from industry and academia met at the federal level to discuss the future of AI, quantum computing and supercomputing in Germany.

In two panel discussions, (inter)national representatives from academia and industry discussed, among other things, which steps are necessary for European AI, QC and HPC research to (continue to) rank among the world's best. Concrete ideas were discussed for making optimal use of synergies and strengthening European competitiveness.

State Secretary Tobias Gotthardt visited the LfD at OTH Regensburg for an exchange on current developments in quantum computing in academia and industry. Using interactive demonstrators and talks, the LfD presented its long-standing quantum activities in research and teaching. We were also delighted to welcome representatives from local industry, who provided interesting insights into their companies' quantum-specific projects. Many thanks to:

- Dr. Johannes Klepsch, BMW

- Dr. Christoph Niedermeier, Siemens

- Dr. Sebastian Luber, Infineon

- Theresa Schreyer, Schaeffler

- Dr. Christian Otto, OptWare

The LfD presented its ongoing work at the Smart Country Convention (SCCON) in Berlin. SCCON covers a broad range of topics, including smart cities and the digitalisation of public services. It is organised by Bitkom, the industry association of the German information and telecommunications sector, under the patronage of the Federal Ministry of the Interior and Community.

At the LfD exhibition booth, in the immediate vicinity of the Bitkom stand, Benno Bielmeier presented our contributions to the Digital Innovation Ostbayern (DInO) and the AI Transfer Plus (KIT+) projects, while Tom Krüger, Hila Safi, Lukas Schmidbauer and Simon Thelen from the quantum team showcased our results in quantum computing as part of the TAQO-PAM and QLindA projects to many interested convention visitors. The positive response we received from various high-level business and public administration stakeholders confirmed to us that there is significant interest in AI and quantum computing in these fields.

The convention featured many industry leaders and high-ranking politicians. Among them were Cem Özdemir, Federal Minister of Food and Agriculture; Volker Wissing, Federal Minister for Digital and Transport; and Edgars Rinkēvičs, President of Latvia. In her opening speech, Federal Minister of the Interior Nancy Faeser said: "We have to keep up with technological progress and the expectations of society. To do this, we need a spirit of innovation and a willingness to reform." We at the Lab for Digitalisation could not agree more.

The partners of the TAQO-PAM project held their 6th consortium meeting at Eviden in the beautiful city of Tübingen to exchange ideas and discuss the results of the last 6 months. Hila Safi, Maja Franz, Lukas Schmidbauer, Simon Thelen and Tom Krüger presented their latest results to the consortium.

Dr. Hans-Peter Nollert from the University of Tübingen gave a great talk on the origins of Eviden and on visualisations of special and general relativity. After some great food at the "Wurstküche", we went on a city tour as the final event of the day and did not let the rain dampen our mood.

At this year's Linux Plumbers Conference, our PhD student Benno Bielmeier gave a talk that presented his work on probabilistic real-time analyses to a broader audience. With his techniques, Benno aims to probabilistically predict the real-time behaviour of complex software systems. His talk met with great interest among Linux kernel developers and led to a fruitful discussion with core kernel developers. Congrats, Benno!

Abstract

Ensuring temporal correctness of real-time systems is challenging. The level of difficulty is determined by the complexity of hardware, software, and their interaction. Real-time analysis on modern complex hardware platforms with modern complex software ecosystems, such as the Linux kernel with its userland, is hard or almost impossible with traditional methods like formal verification or real-time calculus. We need new techniques and methodologies to analyse real-time behaviour and validate real-time requirements.

In this talk, we present a toolkit designed to evaluate the probabilistic Worst-Case Execution Time (pWCET) of real-time Linux systems. It uses a hybrid combination of traditional measurement-based and model-based techniques. Together, these derive execution time distributions that account for variability and uncertainty in real-time tasks. This approach provides an assessment of execution time bounds and helps engineers achieve fast and robust temporal prediction of their real-time environments.

Our framework models runtime behaviour and predicts WCET in a streamlined four-phase process: (1) model relevant aspects of the system as a finite automaton, (2) instrument the system and measure latencies within the model, (3) generate a stochastic model based on semi-Markov chains, and (4) calculate pWCET via extreme value statistics. This method is applicable across system context boundaries without being tied to specific platforms, infrastructure or tracing tools.
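
Phase (4) can be sketched with the block-maxima method from extreme value statistics. The idea is to split the latency samples into blocks and fit a Gumbel distribution to the block maxima. We then read off the latency that a block maximum exceeds only with a chosen, tiny probability. The method-of-moments Gumbel fit and the per-block reading of the exceedance probability are simplifying assumptions of this sketch. The toolkit presented in the talk may use a different distribution family or estimator.

```python
import math
import statistics

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant, used by the Gumbel mean


def block_maxima(samples, block_size):
    """Split a measurement series into consecutive blocks and keep each block's maximum."""
    return [max(samples[i:i + block_size])
            for i in range(0, len(samples) - block_size + 1, block_size)]


def fit_gumbel(maxima):
    """Method-of-moments Gumbel fit; returns location mu and scale beta."""
    beta = statistics.stdev(maxima) * math.sqrt(6) / math.pi
    mu = statistics.fmean(maxima) - EULER_GAMMA * beta
    return mu, beta


def pwcet(mu, beta, exceedance):
    """Latency that a block maximum exceeds with probability `exceedance` (Gumbel quantile)."""
    return mu - beta * math.log(-math.log1p(-exceedance))
```

Smaller exceedance probabilities push the estimate further into the tail. That tail extrapolation lets a short measurement campaign stand in for days of observation.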

The framework requires injecting tracepoints to generate a lightweight sequence of timestamped events. This can be done with existing Linux tracing mechanisms, for instance BPF or ftrace. Benefits include reducing WCET measurement duration from days to minutes. This dramatically accelerates development cycles for open-source systems with frequent code updates, like Linux. This efficiency does not compromise accuracy: our hybrid approach ensures robust temporal predictions, enabling developers to quickly assess the real-time implications of changes and maintain system performance.
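
Once tracepoints yield a timestamped sequence of automaton states, phases (2) and (3) largely reduce to counting. We need the empirical transition probabilities between states. For each transition, we also need samples of the time spent before it, which are the sojourn times of a semi-Markov chain. The following sketch assumes events arrive as (timestamp, state) pairs already ordered by time. The state names in the example are hypothetical and not taken from the talk's models.

```python
from collections import defaultdict


def estimate_semi_markov(events):
    """Estimate transition probabilities and sojourn-time samples from (timestamp, state) events."""
    counts = defaultdict(lambda: defaultdict(int))
    sojourn = defaultdict(list)  # (from_state, to_state) -> observed durations
    for (t0, s0), (t1, s1) in zip(events, events[1:]):
        counts[s0][s1] += 1
        sojourn[(s0, s1)].append(t1 - t0)
    probs = {s: {nxt: c / sum(row.values()) for nxt, c in row.items()}
             for s, row in counts.items()}
    return probs, dict(sojourn)


trace = [(0, "idle"), (10, "irq"), (15, "sched"), (40, "idle"), (50, "irq"), (52, "idle")]
probs, sojourn = estimate_semi_markov(trace)
print(probs["irq"], sojourn[("idle", "irq")])
```

Sampling paths from such a model and summing their sojourn times yields end-to-end latency distributions. Extreme value statistics can then be applied to those distributions.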

In our talk, we outline the steps taken towards this new evaluation method and discuss the limitations and potential impacts on the development process. We invite interaction from the community to discuss the benefits and limitations of this approach. Our goal is to refine this toolkit to enhance its utility for Linux kernel developers and maintainers, ultimately contributing to a more efficient and effective development process for real-time systems.

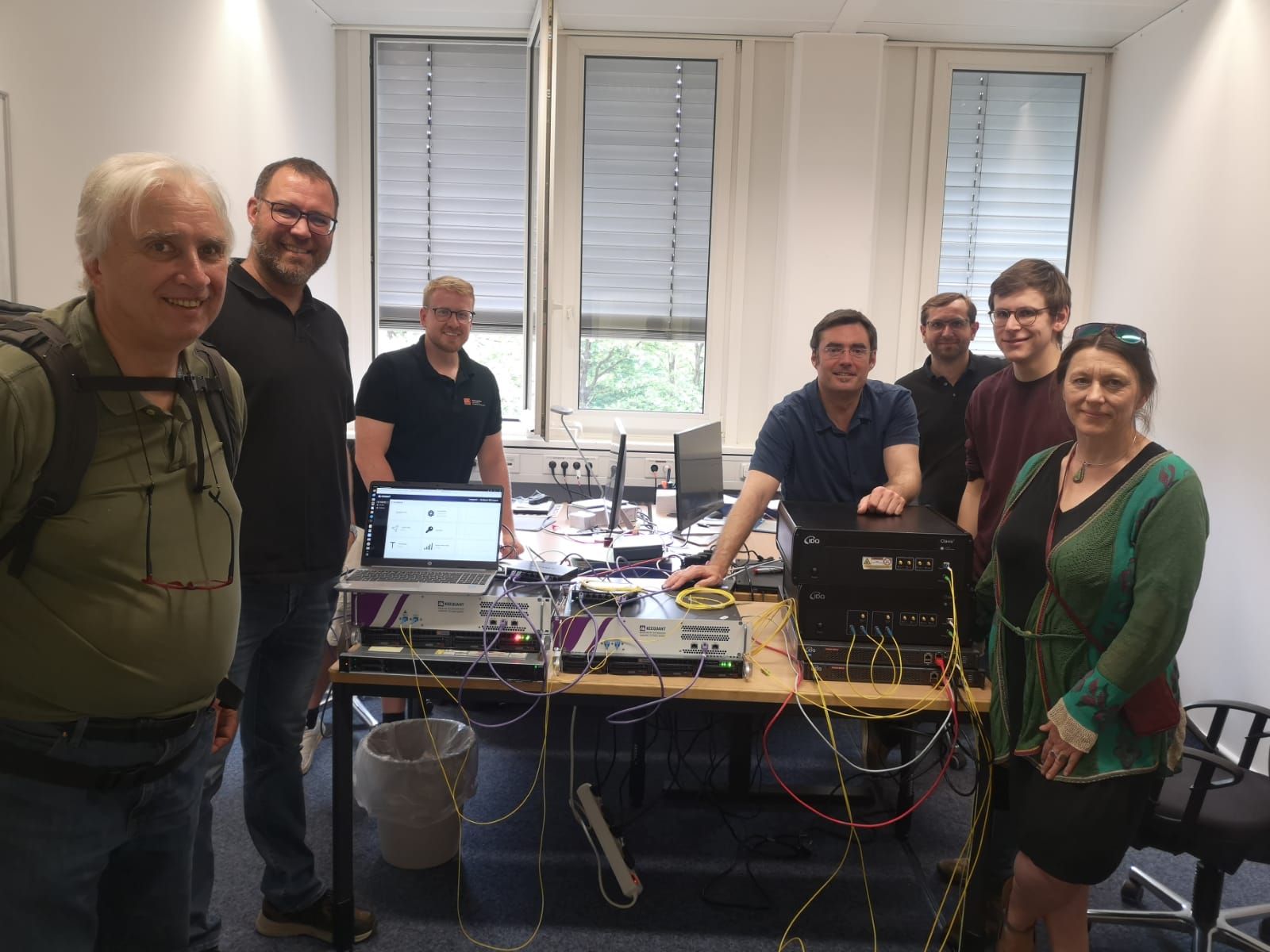

For the final time, the QLindA consortium met at OTH Regensburg to review the results and milestones achieved during the project's lifespan of more than three years.

These milestones encompass a broad selection of publications on quantum machine learning for industrial applications, jointly organised workshops at the IEEE Conference on Quantum Computing and Engineering, and an extensive software library, among others.

Fittingly, a beer barrel was opened later in the evening to properly celebrate the project's conclusion. Prost!

We are happy that all our full paper submissions to IEEE QCE, one of the leading quantum computing conferences, have been accepted:

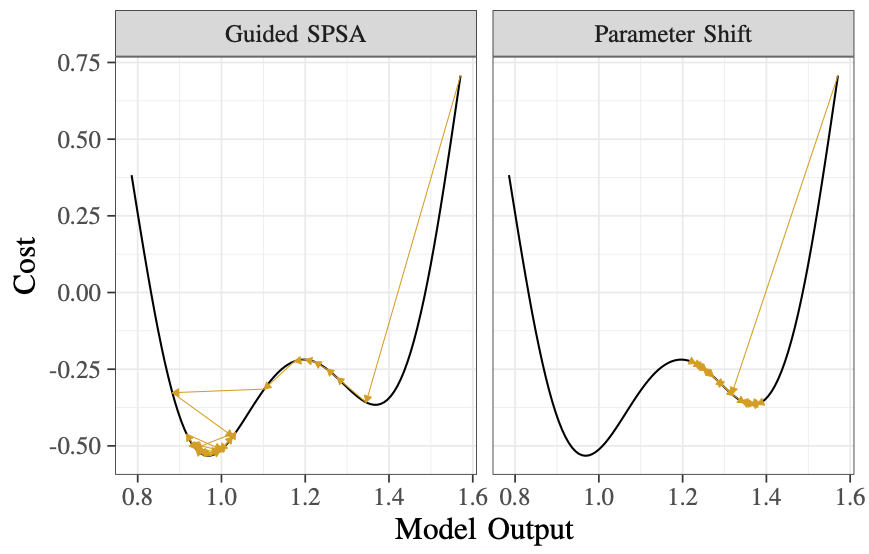

- Maniraman Periyasamy, Axel Plinge, Christopher Mutschler, Daniel D. Scherer, Wolfgang Mauerer: Guided-SPSA: Simultaneous Perturbation Stochastic Approximation assisted by the Parameter Shift Rule

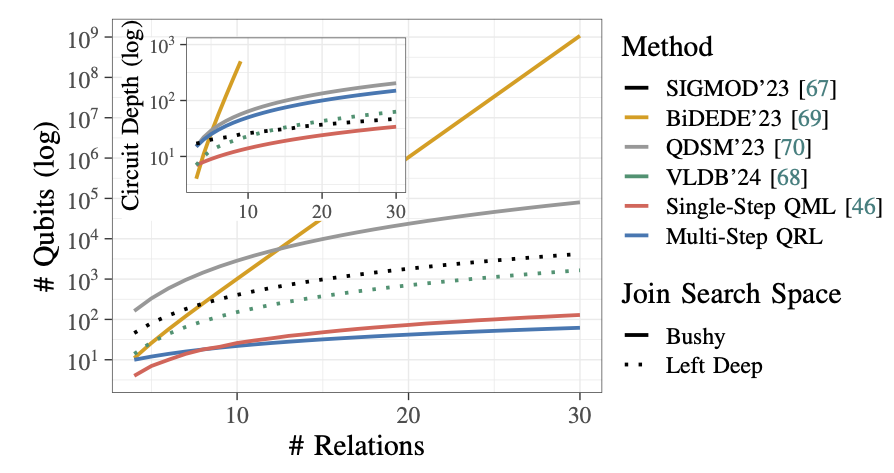

- Maja Franz, Tobias Winker, Sven Groppe, Wolfgang Mauerer: Hype or Heuristic? Quantum Reinforcement Learning for Join Order Optimisation

Abstract

The study of variational quantum circuits (VQCs) has received significant attention from the quantum computing community in recent years. These hybrid algorithms, utilizing both classical and quantum components, are well-suited for noisy intermediate-scale quantum devices. Estimating exact gradients with the parameter-shift rule to optimize VQCs is realizable on NISQ devices, but this approach does not scale well to larger problem sizes. The computational complexity of the parameter-shift rule, in terms of the number of circuit evaluations required for gradient estimation, scales linearly with the number of parameters in VQCs. On the other hand, techniques that approximate the gradients of VQCs, such as simultaneous perturbation stochastic approximation (SPSA), do not scale with the number of parameters but struggle with instability and often attain suboptimal solutions. In this work, we introduce a novel gradient estimation approach called Guided-SPSA, which meaningfully combines the parameter-shift rule and SPSA-based gradient approximation. Guided-SPSA results in a 15% to 25% reduction in the number of circuit evaluations required during training, for a similar or better optimality of the solution found compared to the parameter-shift rule. Guided-SPSA outperforms standard SPSA in all scenarios and outperforms the parameter-shift rule in scenarios such as suboptimal initialization of the parameters. We demonstrate numerically the performance of Guided-SPSA on different paradigms of quantum machine learning.
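
The two ingredients that Guided-SPSA combines can be contrasted on a toy objective. The parameter-shift rule needs 2 evaluations per parameter. SPSA needs only 2 evaluations in total but is noisy. This sketch does not reproduce Guided-SPSA itself. The cost function `toy_cost` is a hypothetical stand-in in which each angle acts like a single-qubit rotation, so the shift rule is exact for it. The SPSA step size `c` is an arbitrary choice.

```python
import math
import random


def toy_cost(theta):
    """Hypothetical stand-in for <H>(theta); each angle acts like a rotation angle."""
    return sum(math.cos(t) for t in theta)


def parameter_shift_grad(f, theta, shift=math.pi / 2):
    """Exact gradient for rotation-generated parameters; 2 * len(theta) evaluations."""
    grad = []
    for i in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[i] += shift
        minus[i] -= shift
        grad.append((f(plus) - f(minus)) / (2 * math.sin(shift)))
    return grad


def spsa_grad(f, theta, c=0.1, rng=random):
    """Stochastic gradient estimate from 2 evaluations, independent of len(theta)."""
    delta = [rng.choice((-1, 1)) for _ in theta]  # random simultaneous perturbation
    plus = [t + c * d for t, d in zip(theta, delta)]
    minus = [t - c * d for t, d in zip(theta, delta)]
    diff = (f(plus) - f(minus)) / (2 * c)
    return [diff / d for d in delta]


theta = [0.3, 1.2]
print(parameter_shift_grad(toy_cost, theta))
print(spsa_grad(toy_cost, theta, rng=random.Random(0)))
```

A single SPSA estimate can be far off. Only its average over many perturbations approaches the exact gradient, which is why SPSA-based training tends to be unstable without additional guidance.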

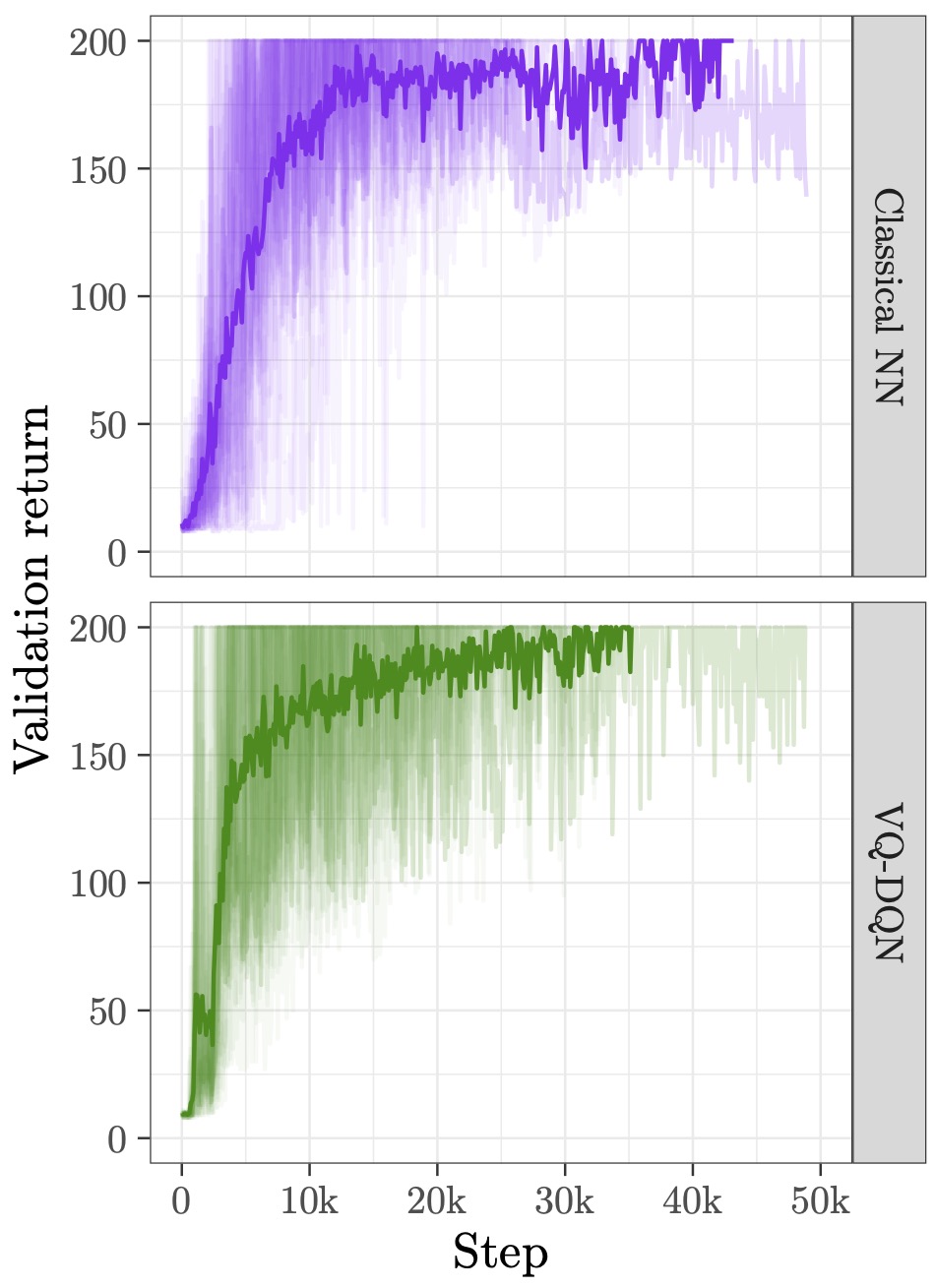

Abstract

Identifying optimal join orders (JOs) stands out as a key challenge in database research and engineering. Owing to the large search space, established classical methods rely on approximations and heuristics. Recent efforts have successfully explored reinforcement learning (RL) for JO. Likewise, quantum versions of RL have received considerable scientific attention. Yet, it is an open question whether they can achieve sustainable, overall practical advantages with improved quantum processors. In this paper, we present a novel approach that uses quantum reinforcement learning (QRL) for JO based on a hybrid variational quantum ansatz. Unlike approaches based on quantum(-inspired) optimisation, it is able to handle general bushy join trees instead of resorting to simpler left-deep variants. Yet it requires multiple orders of magnitude fewer qubits, which are a scarce resource even for post-NISQ systems. Despite moderate circuit depth, the ansatz exceeds current NISQ capabilities, which requires an evaluation by numerical simulations. QRL may not significantly outperform classical approaches in solving the JO problem with respect to result quality, although we observe parity. However, we find a drastic reduction in required trainable parameters. This benefits practically relevant aspects ranging from shorter training times compared to classical RL, to less involved classical optimisation passes, to better use of available training data, and fits data-stream and low-latency processing scenarios. Our comprehensive evaluation and careful discussion deliver a balanced perspective on possible practical quantum advantage. They also provide insights for future systemic approaches and allow for quantitatively assessing trade-offs of quantum approaches for one of the most crucial problems of database management systems.

Multiple contributions to IEEE QCE, IEEE QSW and ACM SIGMOD conferences

We are happy that many of our submissions have recently met with a favourable response from reviewers:

- Quantum Data Encoding Patterns and their Consequences at the QDSM workshop at ACM SIGMOD by Martin Gogeißl, Hila Safi, and Wolfgang Mauerer

- Polynomial Reduction Methods and their Impact on QAOA Circuits at IEEE QSW by Lukas Schmidbauer and Wolfgang Mauerer, together with Karen Wintersberger (Siemens Technology) and Elisabeth Lobe (DLR)

- QCEDA: Using Quantum Computers for EDA at SAMOS'24, together with Matthias Jung (Uni Würzburg), Sven O. Krumke (RPTU Kaiserslautern), Christoph Schroth (Fraunhofer IESE), and Elisabeth Lobe (DLR)

- The second edition of the QML workshop at IEEE QCE, together with Daniel Hein (Siemens Technology)

- Our tutorial on quantum annealing for database optimisation at IEEE QCE'24, together with many colleagues from Lübeck and Helsinki, two of the leading places for research on quantum data management.

Thanks to all the involved group members and collaborators for the hard and exciting work!

Joint contribution to the 27th International Conference on Computing in High Energy & Nuclear Physics (CHEP) by Maja Franz, Manuel Schönberger and Wolfgang Mauerer, together with international partners:

- Melvin Stobl, Eileen Kuehn and Achim Streit (Karlsruhe Institute of Technology)

- Pia Zurita (Complutense University of Madrid, Spain)

- Markus Diefenthaler (Jefferson Lab, USA)

Noisy intermediate-scale quantum (NISQ) computers are limited by imperfections and small scale. Nevertheless, they hold promise for near-term quantum advantages in nuclear and high-energy physics (NHEP) when coupled with co-designed quantum algorithms and special-purpose quantum processing units. Developing co-design approaches is essential for near-term usability, but inherent challenges exist due to the fundamental properties of NISQ algorithms. In this contribution, we therefore investigate the core algorithms that solve optimisation problems via the abstraction layer of a quadratic Ising model, or of quadratic unconstrained binary optimisation (QUBO) problems. These are quantum annealing (QA) and the quantum approximate optimisation algorithm (QAOA). Applications in NHEP that use QUBO formulations range from particle track reconstruction through job scheduling on computing clusters to experimental control. QA and QAOA do not inherently imply quantum advantage. However, QA runtime for specific problems can be determined from the physical properties of the underlying Hamiltonian, although this determination is itself a computationally hard problem.
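
The Ising and QUBO views mentioned above are interchangeable. The substitution x_i = (1 + s_i)/2 maps binary variables x_i in {0, 1} to spins s_i in {-1, +1}. The sketch below performs this conversion and checks by brute force that both energies agree. Two conventions are our own choices, and tools differ on them: Q is stored as an upper-triangular dict, and the Ising energy is offset + sum h_i s_i + sum J_ij s_i s_j.

```python
from itertools import product


def qubo_to_ising(Q):
    """Map E(x) = sum_{i<=j} Q[i,j] x_i x_j (x in {0,1}) to
    E(s) = offset + sum_i h[i] s_i + sum_{i<j} J[i,j] s_i s_j (s in {-1,+1})."""
    h, J, offset = {}, {}, 0.0
    for (i, j), q in Q.items():
        if i == j:  # q * x_i = q/2 + (q/2) s_i
            h[i] = h.get(i, 0.0) + q / 2
            offset += q / 2
        else:  # q * x_i x_j = (q/4)(1 + s_i + s_j + s_i s_j)
            J[(i, j)] = J.get((i, j), 0.0) + q / 4
            h[i] = h.get(i, 0.0) + q / 4
            h[j] = h.get(j, 0.0) + q / 4
            offset += q / 4
    return h, J, offset


def qubo_energy(Q, x):
    return sum(q * x[i] * x[j] for (i, j), q in Q.items())


def ising_energy(h, J, offset, s):
    return (offset + sum(v * s[i] for i, v in h.items())
            + sum(v * s[i] * s[j] for (i, j), v in J.items()))


Q = {(0, 0): -1.0, (1, 1): 2.0, (0, 1): 3.0, (1, 2): -4.0, (2, 2): 1.0}
h, J, offset = qubo_to_ising(Q)
for x in product((0, 1), repeat=3):
    s = [2 * xi - 1 for xi in x]  # inverse substitution s_i = 2 x_i - 1
    print(x, qubo_energy(Q, x), ising_energy(h, J, offset, s))
```

The couplings J and the local fields h are what an annealer or a QAOA cost Hamiltonian consumes. The constant offset does not affect which assignment is optimal.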

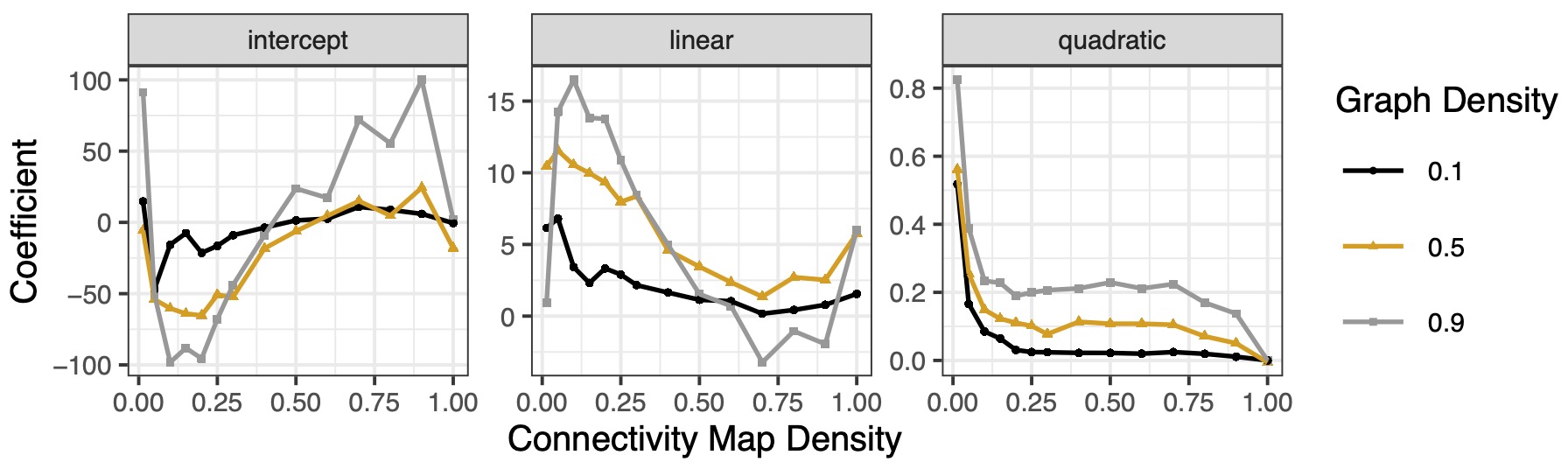

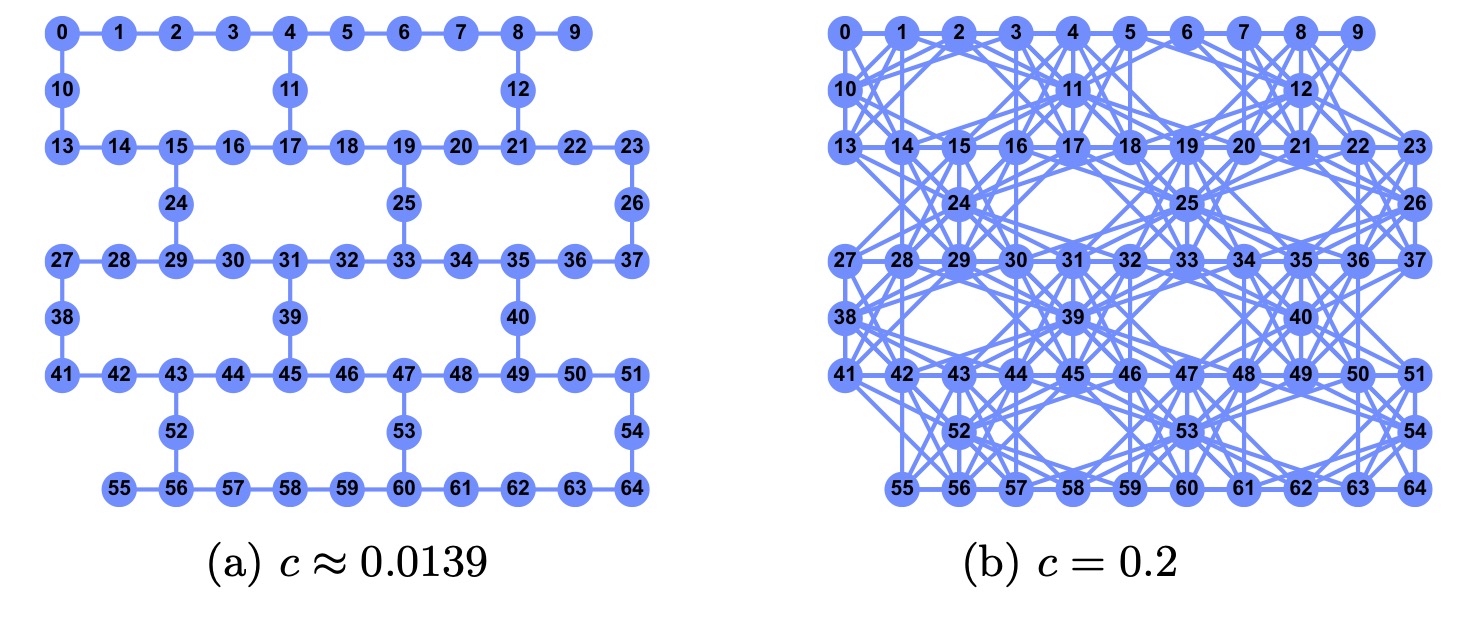

Our primary focus is on two key areas: Firstly, we estimate runtimes and scalability for common NHEP problems addressed via QUBO formulations by identifying minimum energy solutions of intermediate Hamiltonian operators encountered during the annealing process. Secondly, we investigate how the classical parameter space in the QAOA, together with approximation techniques such as a Fourier-analysis based heuristic, proposed by Zhou et al. (2018), can help to achieve (future) quantum advantage, considering a trade-off between computational complexity and solution quality. Our computational analysis of seminal optimisation problems suggests that only lower frequency components in the parameter space are of significance for deriving reasonable annealing schedules, indicating that heuristics can offer improvements in resource requirements, while still yielding near-optimal results.
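
The Fourier heuristic of Zhou et al. describes the 2p QAOA angles through a few amplitudes u_k and v_k of low-frequency sine and cosine modes. This is what makes the observation about low-frequency components actionable. Below is a sketch of that parameterisation as we understand it: gamma_i = sum_k u_k sin((k - 1/2)(i - 1/2) pi / p) and beta_i = sum_k v_k cos((k - 1/2)(i - 1/2) pi / p). It assumes `u` and `v` have equal length. It only expands amplitudes into a schedule and omits the optimisation loop and the depth-extension strategy of the original heuristic.

```python
import math


def fourier_schedule(u, v, p):
    """Expand q Fourier amplitudes (u, v) into depth-p QAOA angles (gammas, betas)."""
    gammas, betas = [], []
    for i in range(1, p + 1):
        # phase of mode k at layer i; assumes len(u) == len(v) == q
        phases = [(k - 0.5) * (i - 0.5) * math.pi / p for k in range(1, len(u) + 1)]
        gammas.append(sum(uk * math.sin(ph) for uk, ph in zip(u, phases)))
        betas.append(sum(vk * math.cos(ph) for vk, ph in zip(v, phases)))
    return gammas, betas


gammas, betas = fourier_schedule([0.8], [0.6], p=10)
print([round(g, 3) for g in gammas])
print([round(b, 3) for b in betas])
```

With a single mode (q = 1) and positive amplitudes, gamma grows and beta shrinks with the layer index, which resembles a discretised annealing schedule. Only 2q numbers need to be optimised instead of 2p.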

Wolfgang Mauerer, Ralf Ramsauer, Petra Eichenseher and Simon Thelen, together with Prof. Dr. Jürgen Mottok (LaS³, Faculty of Electrical and Information Technologies), paid a visit to MuQuaNet at its site at the University of the Bundeswehr Munich. We are proud to take part in Bavarian research regarding quantum cryptography with our own brand-new quantum key distribution system and are looking forward to opportunities to deepen our relationship with UniBW in the future.

In a prestigious event hosted by LfD, Bavarian State Minister for Science and Arts, Markus Blume, inaugurated the OTH's new quantum key distribution system provided by "Quantum Optics Jena" and funded by "Hightech Agenda Bayern". The system will play an important role in future cross-faculty efforts to integrate quantum cryptography into education, science and industry.

The event featured many key players from the Bavarian quantum science and industry community, including Prof. Dr. Rudolf Gross from Munich Quantum Valley, Dr. Nils gentschen Felde from MuQuaNet, Prof. Dr. Christoph Marquardt from FAU, Laura Schulz from LRZ, Dr. Bettina Heim from OHB, Dr. Sebastian Luber from Infineon, Dr. Christoph Niedermeier from Siemens, Prof. Dr. Helena Liebelt from THD, Dr. Peter Eder from IQM, Dr. Andreas Böhm from Bayern Innovativ, Theresa Schreyer and Imran Khan from Keequant.

The LfD hosted an international, interdisciplinary exchange on quantum computing for nuclear and high-energy physics to discuss challenges and applications.

Guests from the USA, UK, Spain and Germany participated enthusiastically in the workshop and exchanged ideas on the latest advances in quantum computing and quantum machine learning.

We are thrilled to actively contribute to these pioneering efforts!

Markus Schottenhammer and Andreas Fellner successfully defend their Bachelor theses "Automated Exercise Tracking and Repetition Detection Using a Smartwatch" and "Comparison of Variational Quantum Circuit Structures for Quantum Reinforcement Learning". Congrats to both!

Vincent Eichenseher successfully defends his Bachelor Thesis "Comparative Analysis of Parameter Selection Heuristics for the Quantum Approximate Optimisation Algorithm". Congrats, Vincent!

Lukas Schmidbauer has joined the team as a doctoral student in the field of quantum computing, contributing to the TAQO-PAM project. Welcome, Lukas!

Simon Thelen has joined the team as a doctoral student in the field of quantum computing, contributing to the TAQO-PAM project. Welcome, Simon!

In the presence of Bavarian Minister of Economic Affairs Hubert Aiwanger, a new competence centre for digitalisation was presented to the public at the TechBase in Regensburg. The project Digital Innovation Ostbayern (DInO) is one of three European Digital Innovation Hubs (EDIH) in Bavaria. Within this project, the partners TH Deggendorf, OTH Regensburg, R-Tech GmbH and the Bavarian AI agency "baiosphere" support and advise small and medium-sized enterprises as well as public institutions in meeting their digital challenges.

Owing to its long-standing, cross-domain expertise, the Labor für Digitalisierung (LfD) is responsible for the focus topics of artificial intelligence, machine learning and data analysis within the project. Benno Bielmeier and Wolfgang Mauerer provide application-oriented support in the practical assessment of risks, security and quality assurance of sustainable AI concepts and solutions. In doing so, the impact on the economy, politics and society is considered holistically, and a bridge is built between the current state of research and practical requirements in order to drive transformative innovation and digitalisation.

New contribution to the CORE A* VLDB conference

Our leading research on quantum data management, driven by Manuel Schönberger, Immanuel Trummer, head of the Cornell Database Group, and Wolfgang Mauerer, uncovers the potential of quantum computing for databases. In our latest paper, accepted for publication in PVLDB and to be presented at VLDB'24, we derive a novel, tailored encoding method to enable the use of highly optimised, quantum-inspired Fujitsu Digital Annealer hardware to solve large instances of the long-standing join ordering problem.

Abstract

Finding the optimal join order (JO) is one of the most important problems in query optimisation, and has been extensively considered in research and practice. As it involves huge search spaces, approximation approaches and heuristics are commonly used, which explore a reduced solution space at the cost of solution quality. To explore even larger JO search spaces, we may consider special-purpose software, such as mixed-integer linear programming (MILP) solvers, which have successfully solved JO problems. However, even mature solvers cannot overcome the limitations of conventional hardware prompted by the end of Moore’s law.
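To make the size of the JO search space concrete, here is a minimal Python sketch, assuming left-deep plans, the common C_out cost model (sum of intermediate result cardinalities) and independent join predicates. It exhaustively enumerates all n! orders, which is precisely what becomes infeasible for large n; it is not the digital-annealer encoding described here, and the function names are hypothetical.

```python
from itertools import permutations


def c_out_cost(order, card, sel):
    """C_out cost of a left-deep join order: sum of all intermediate result sizes.

    card: relation -> cardinality; sel: frozenset({r1, r2}) -> join selectivity.
    Missing predicates default to selectivity 1.0, i.e., a cross product.
    """
    size = float(card[order[0]])
    joined = [order[0]]
    cost = 0.0
    for rel in order[1:]:
        size *= card[rel]
        for other in joined:  # assumes independent predicates
            size *= sel.get(frozenset((rel, other)), 1.0)
        joined.append(rel)
        cost += size
    return cost


def best_left_deep_order(card, sel):
    """Exhaustive search over all n! orders; infeasible beyond a handful of relations."""
    return min(permutations(card), key=lambda order: c_out_cost(order, card, sel))


card = {"A": 1000, "B": 10, "C": 100}
sel = {frozenset(("A", "B")): 0.01, frozenset(("B", "C")): 0.1}
best = best_left_deep_order(card, sel)
```

In this toy instance, any order that first joins A with C pays for a 100,000-tuple cross product, whereas the best orders keep the intermediate result at 100 tuples. For around 50 relations, as targeted in the abstract above, enumerating 50! orders is out of the question.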

We consider quantum-inspired digital annealing hardware, which takes inspiration from quantum processing units (QPUs). Unlike QPUs, which likely remain limited in size and reliability in the near and mid-term future, the digital annealer (DA) can solve large instances of mathematically encoded optimisation problems today. We derive a novel, native encoding for the JO problem tailored to this class of machines that substantially improves over known MILP and quantum-based encodings, and reduces encoding size over the state-of-the-art. By augmenting the computation with a novel readout method, we derive valid join orders for each solution obtained by the (probabilistically operating) DA. Most importantly and despite an extremely large solution space, our approach scales to practically relevant dimensions of around 50 relations and improves result quality over conventionally employed approaches, adding a novel alternative to solving the long-standing JO problem.

The new supplementary study programme "Quantum Technologies" will start for the first time in winter semester 2023/24!

Quantum technologies hold immense potential for solving highly complex problems that have so far pushed classical computers to their limits. The supplementary course offers students a gentle introduction to this complex topic: from the basics of fascinating quantum-mechanical phenomena and their exploitation in cryptography to the simulation of quantum circuits and problem formulations for quantum computers. The study programme prepares students in the best possible way for the technology of the future! Registration for the first round is now open via the website of the Regensburg School for Digital Sciences (RSDS).

The additional study programme is an interdisciplinary initiative of Prof. Dr. Ioana Serban (Faculty of Applied Natural and Cultural Sciences), Prof. Dr. Jürgen Mottok (LaS³, Faculty of Electrical and Information Technologies) and Prof. Dr. Wolfgang Mauerer (LfD, Faculty of Computer Science and Mathematics).

Wolfgang Mauerer and colleagues from the QLindA consortium hosted a workshop on Quantum Machine Learning (QML) as part of the IEEE Conference on Quantum Computing and Engineering in Bellevue, Washington, USA, to discuss challenges and applications of QML.

Researchers from all over the world participated enthusiastically in the workshop and exchanged ideas on the latest advances in Quantum Machine Learning.

We are thrilled to actively contribute to these pioneering efforts!

The LfD Quantum booth at the World of Quantum Fair from 27th to 30th of June in Munich attracted many curious visitors and ensured a wide range of conversations on the topic.

The corresponding after-show party was well received, sparked engaging discussions about the future of quantum computing and helped initiate new interdisciplinary collaborations.

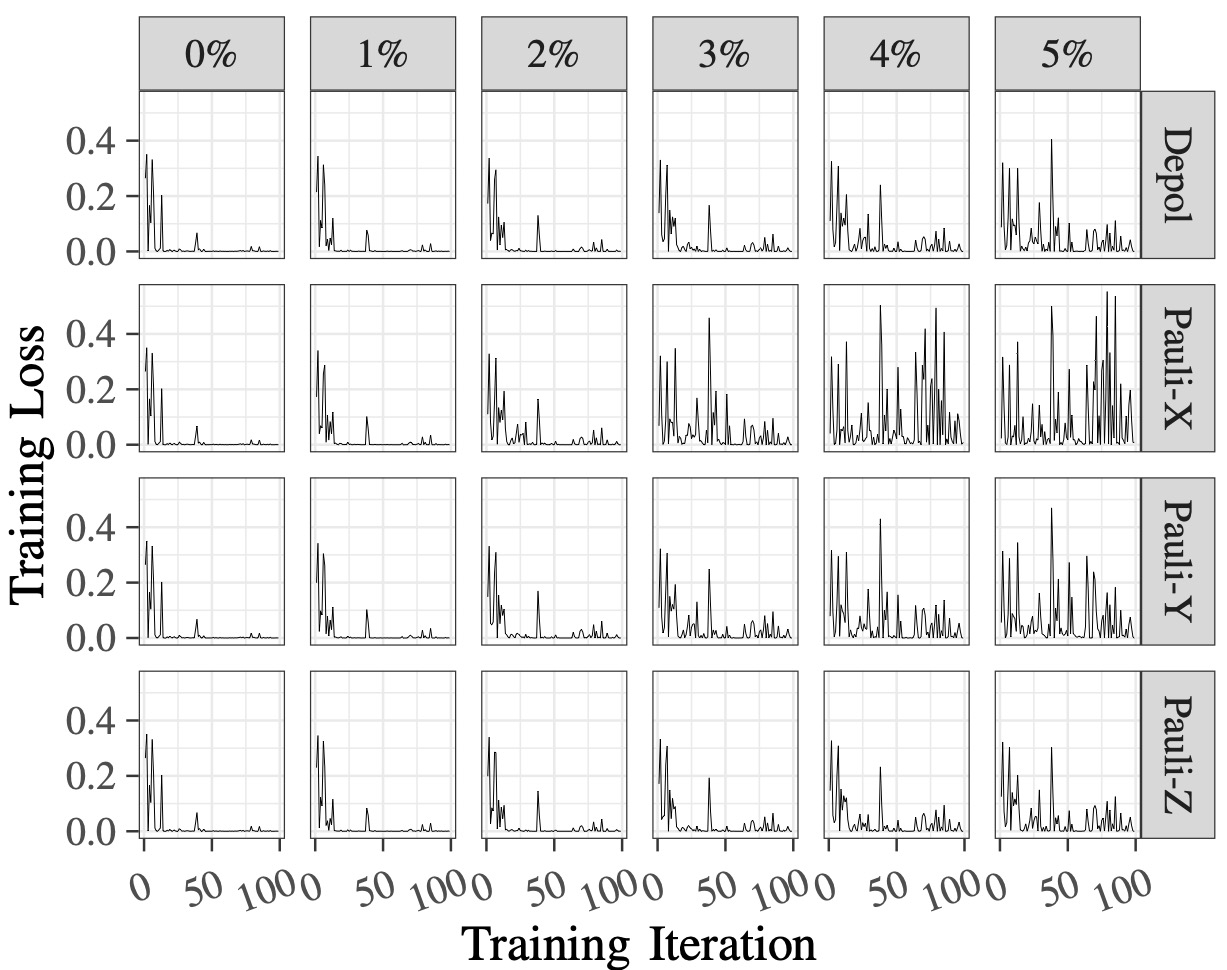

Advancing Quantum Software Engineering at IEEE QSW'23

Two papers by Felix Greiwe, Tom Krüger and Hila Safi, the latter in joint work with Karen Wintersperger, have been accepted at the IEEE Quantum Software Week. They deal with the role of noise and imperfections in quantum software engineering, and uncover generic patterns in the performance of systems optimised by HW/SW co-design approaches. Congrats, Felix, Tom and Hila!

The papers arose from the BMBF-sponsored project TAQO-PAM. Of course, both are accompanied by extensive reproduction packages that allow independent researchers to confirm our results.

Our work in developing quantum applications for industry is presented by the Bavarian Ministry of Science as research highlight in Bavaria.

Verband der Elektro- und Digitalindustrie, Working Group Functional Safety ISO 26262 and Software Subgroup

Abstract

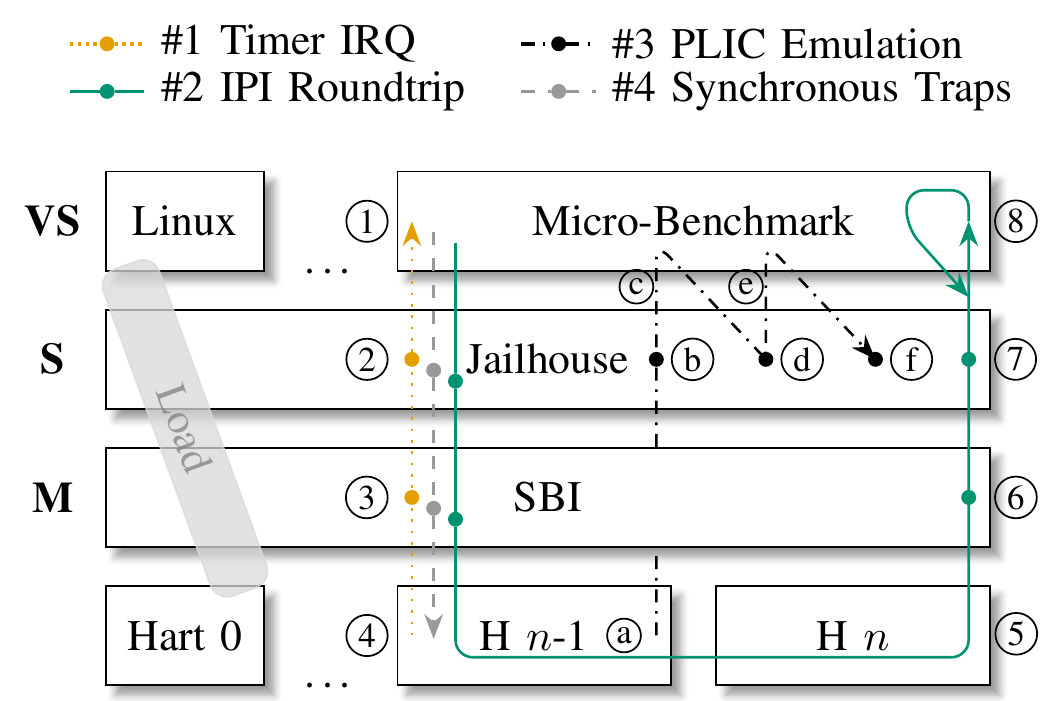

Consolidation of multiple systems of different criticality to one platform of mixed-criticality is an ongoing trend in various embedded industries due to the availability of powerful multicore processors. The isolation of different computing domains is the most crucial factor to guarantee freedom from interference. In this talk, Ralf Ramsauer presents the current state of Static Hardware Partitioning, a technique that leverages virtualisation extensions of modern CPUs to strongly isolate different computing domains on SMP platforms. He shows that it is possible to virtualise embedded real-time systems with (almost) zero runtime overhead and software interaction.

International Workshop on Quantum Data Science and Management organised by Wolfgang Mauerer jointly with Sven Groppe (University of Lübeck), Jiaheng Lu (University of Helsinki), and Le Gruenwald (University of Oklahoma) at the 49th International Conference on Very Large Data Bases.

Goals of the Workshop

For most database researchers, quantum computing and quantum machine learning are still new research fields. The goal of this workshop is to bring together academic researchers and industry practitioners from multiple disciplines (e.g., database, AI, software, physics, etc.) to discuss the challenges, solutions, and applications of quantum computing and quantum machine learning that have the potential to advance the state of the art of data science and data management technologies. Our purpose is to foster the interaction between database researchers and more traditional quantum disciplines, as well as industrial users. The workshop serves as a forum for the growing quantum computing community to connect with database researchers to discuss the wider questions and applications of how quantum resources can benefit data science and data management tasks, and how quantum software can support this endeavor.

Contribution at the ACM SIGMOD/PODS International Conference on Management of Data by Tobias Winker, Sven Groppe (University of Lübeck), Valter Uotila, Zhengtong Yan, Jiaheng Lu (University of Helsinki), Maja Franz and Wolfgang Mauerer.

Abstract

In the last few years, the field of quantum computing has experienced remarkable progress. Prototype quantum computers already exist and have been made available to users through cloud services (e.g., IBM Q experience, Google quantum AI, or Xanadu quantum cloud). While fault-tolerant and large-scale quantum computers are not available yet (and may not be for a long time, if ever), the potential of this new technology is undeniable. Quantum algorithms provably either outperform classical approaches for several tasks, or cannot be efficiently simulated by classical means under reasonable complexity-theoretic assumptions. Even imperfect current-day technology is speculated to exhibit computational advantages over classical systems. Recent research is using quantum computers to solve machine learning tasks. Meanwhile, the database community has already successfully applied various machine learning algorithms to data management tasks, so combining the fields seems to be a promising endeavour. However, quantum machine learning is a new research field for most database researchers. In this tutorial, we provide a fundamental introduction to quantum computing and quantum machine learning and show the potential benefits and applications for database research. In addition, we demonstrate how to apply quantum machine learning to the join ordering problem for databases.

Contribution to the 26th International Conference on Computing in High Energy & Nuclear Physics (CHEP) by Maja Franz, Pia Zurita (University of Regensburg), Markus Diefenthaler (Jefferson Lab) and Wolfgang Mauerer.

Abstract

Quantum Computing (QC) is a promising early-stage technology that offers novel approaches to simulation and analysis in nuclear and high energy physics (NHEP). By basing computations directly on quantum mechanical phenomena, speedups and other advantages for many computationally hard tasks are potentially achievable, although both the theoretical underpinning and the practical realization are still subject to considerable scientific debate, which raises the question of their applicability in NHEP.

In this contribution, we describe the current state of affairs in QC: Currently available noisy intermediate-scale quantum (NISQ) computers suffer from a very limited number of quantum bits, and are subject to considerable imperfections, which narrows their practical computational capabilities. Our recent work on optimization problems suggests that the co-design of quantum hardware and algorithms is one route towards practical utility. This approach offers near-term advantages across a variety of domains, but requires interdisciplinary exchange between communities.

To this end, we identify possible classes of applications in NHEP, ranging from quantum process simulation over event classification directly at the quantum level to optimal real-time control of experiments. These types of applications are particularly suited to quantum algorithms that involve Variational Quantum Circuits, but might also benefit from more unusual special-purpose techniques like (Gaussian) Boson Sampling. We outline challenges and opportunities in the cross-domain cooperation between QC and NHEP, and show routes towards co-designed systems and algorithms. In particular, we aim at furthering the interdisciplinary exchange of ideas by establishing a joint understanding of requirements, limitations and possibilities.

Planning and control of industrial manufacturing: Quantum Learning Machine Atos QLM38 deployed in the BMBF research project TAQO-PAM.

An early Christmas present for the Labor für Digitalisierung at OTH Regensburg: a quantum simulation system worth one million euros has now been delivered and installed there. "Such high-tech systems are usually found at major institutions such as Forschungszentrum Jülich, the Leibniz Supercomputing Centre in Munich, the European Organization for Nuclear Research (CERN) and the Munich Quantum Valley," says President Prof. Dr. Ralph Schneider, underlining the special significance of the acquisition.

New professorship within the Hightech Agenda Bayern

Visually, the Christmas present is rather inconspicuous. Nevertheless, with the Quantum Learning Machine "Atos QLM38", OTH Regensburg joins the ranks of top-class research institutes. This is no coincidence: competences in quantum computing have been built up at the Faculty of Computer Science and Mathematics over many years. Most recently, the Free State of Bavaria announced that, within the programme to strengthen quantum professorships under the Hightech Agenda, a new professorship for Algorithmics and Quantum Computing Applications will go to OTH Regensburg.

Prof. Dr. Wolfgang Mauerer heads the Labor für Digitalisierung and is chairing director of the Regensburg Center for Artificial Intelligence (RCAI). He has been working on concrete use cases of quantum informatics for more than 15 years and is regarded as a recognised expert in the field. His concern is not mere academic exchange, but above all closing the gap between basic research and industrial application.

TAQO-PAM: Strong partners from research and industry

This goal is also pursued by the consortium project TAQO-PAM, initiated by Mauerer and funded by the Federal Ministry of Education and Research (BMBF) with a total of 8.2 million euros, of which 2.6 million euros go to OTH Regensburg alone. The partners are BMW Munich, Siemens Munich and Karlsruhe, the Regensburg-based Optware GmbH, Friedrich-Alexander-Universität Erlangen-Nürnberg and Atos Scientific Computing (Tübingen).

The increasing mass production of individualised goods and the complex logistics it requires within modern factories call for solving large optimisation problems in real time. "Classical computers cannot process such problems sufficiently well and fast; even with quantum computers, feasibility is not a given," Mauerer remarks. Under his leadership, the project will therefore design hybrid, quantum-classical special-purpose algorithms. These will enable the soon-to-be-available quantum computers with a few tens of qubits to contribute to solving these problems. This is achieved by integrating adapted quantum processing units (QPUs) into existing scenarios and by extending existing methods of factory automation and production planning.

Strengthening Regensburg as a high-tech location

"This is a prime example of the strong application-oriented and forward-looking research at our university," agree President Schneider and Prof. Dr. Frank Herrmann, Dean of the Faculty of Computer Science and Mathematics. The million-euro Christmas package with the high-performance computer symbolises exactly this. Ralph Schneider also sees it as strengthening Regensburg as a high-tech location. Not every university or industrial research institution can offer the expertise and resources needed to use a Quantum Learning Machine. As a rule, it is not only newcomers to quantum computing who depend on expensively buying computing time at large data centres.

Link to the OTH Regensburg press release

©Photos: OTH Regensburg/Michael Hitzek

At the Critical System Summit in Yokohama, Benno Bielmeier and Wolfgang Mauerer presented in one of six sessions a semiformal approach to deriving statements about the runtime behaviour of complex, mixed-criticality systems.

The presentation was recorded and can be found on YouTube.

Held as part of the Open Source Summit Japan, the event was hosted by the Linux Foundation and its corporate members, among them AT&T, Cisco, Fujitsu, Google, Hitachi, Huawei, IBM, Intel, Meta, Microsoft, NEC and many others, and drew more than 600 participants.

The approach links theoretical formalisms with empirically collected data from real-world applications and aims to remain interpretable and tangible. Its idea is to augment a simplified, formal model based on a priori knowledge about the system's intrinsics with empirical information from measurements on real-world scenarios, which then allows us to infer properties of interest for the certification of safety-critical systems.

Tom Krüger has joined the team as a doctoral student in the field of quantum computing, contributing to the TAQO-PAM project. Welcome, Tom!

Wolfgang Mauerer, Ralf Ramsauer and Andrej Utz present results of the iDev 4.0 project at the SEMICON Europa 2022 in Munich.

Abstract: The advent of multi-core CPUs in nearly all embedded markets has prompted an architectural trend towards combining safety critical and uncritical software on single hardware units. We present an architecture for mixed-criticality systems based on Linux that allows for the consolidation of critical and uncritical components onto a single hardware unit.

In the context of the iDev 4.0 project, the use case of this technological building block is to reduce the overall amount of distributed computational hardware components across semiconductor assembly lines in fabs.

CPU virtualisation extensions enable strict, static partitioning of hardware by direct assignment of resources, which allows us to boot additional operating systems or bare-metal applications alongside Linux. The hypervisor Jailhouse is at the core of the architecture and ensures that the resulting domains can serve workloads of different criticality without interfering in unintended ways. This retains Linux’s feature-richness in uncritical parts, while frugal safety- and real-time-critical applications execute in isolated domains. Architectural simplicity is a central aspect of our approach and a precondition for reliable implementability and successful certification.
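The core invariant of static partitioning, namely that every CPU and memory region belongs to at most one domain, can be illustrated with a small consistency check. This Python sketch uses a hypothetical, simplified `Cell` description; real Jailhouse cell configurations are C structures with many more resource types, and deliberately shared regions (e.g., for inter-cell communication) would need to be whitelisted, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cell:
    """Hypothetical, simplified description of one statically partitioned domain."""
    name: str
    cpus: frozenset       # CPU core IDs assigned exclusively to this cell
    memory: tuple         # (start_address, size_in_bytes) regions


def regions_overlap(a, b):
    (start_a, size_a), (start_b, size_b) = a, b
    return start_a < start_b + size_b and start_b < start_a + size_a


def partition_conflicts(cells):
    """List every CPU or memory resource that is assigned to more than one cell."""
    conflicts = []
    for i, a in enumerate(cells):
        for b in cells[i + 1:]:
            shared = a.cpus & b.cpus
            if shared:
                conflicts.append(f"{a.name}/{b.name}: shared CPUs {sorted(shared)}")
            for ra in a.memory:
                for rb in b.memory:
                    if regions_overlap(ra, rb):
                        conflicts.append(f"{a.name}/{b.name}: overlapping memory {ra} and {rb}")
    return conflicts


linux = Cell("linux", frozenset({0, 1, 2}), ((0x0000_0000, 0x4000_0000),))
rt = Cell("rt-control", frozenset({3}), ((0x4000_0000, 0x1000_0000),))
bad = Cell("rt-bad", frozenset({2}), ((0x3FFF_F000, 0x2000),))
```

Because the assignment is fixed at configuration time, such a check can run before any cell is started, rather than relying on a hypervisor to arbitrate shared resources at runtime.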

In this work, we present our envisioned base system architecture and elaborate on the implications for the transition from existing legacy systems to a consolidated environment.

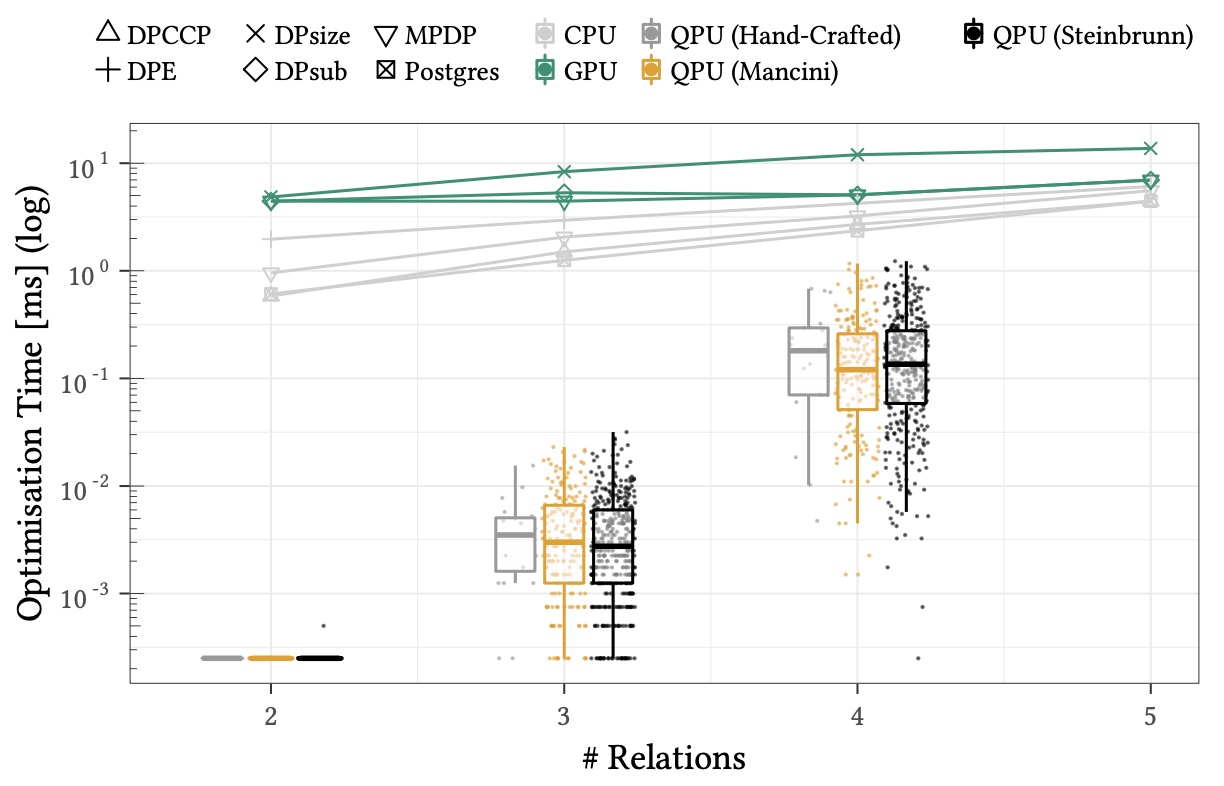

Contribution to CORE A* conference ACM SIGMOD driven by Manuel Schönberger and Wolfgang Mauerer breaks new ground for quantum computing in the database community (PDF).

Abstract: The prospect of achieving computational speedups by exploiting quantum phenomena makes the use of quantum processing units (QPUs) attractive for many algorithmic database problems. Query optimisation, which concerns problems that typically need to explore large search spaces, seems like an ideal match for the known quantum algorithms. We present the first quantum implementation of join ordering, which is one of the most investigated and fundamental query optimisation problems, based on a reformulation to quadratic unconstrained binary optimisation (QUBO) problems. We empirically characterise our method on two state-of-the-art approaches (gate-based quantum computing and quantum annealing), and identify speed-ups compared to the best known classical join ordering approaches for input sizes that can be processed with current quantum annealers. However, we also confirm that the limits of early-stage technology are quickly reached.
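A standard ingredient of QUBO reformulations of ordering problems is expressing "each relation occupies exactly one position, and each position holds exactly one relation" as quadratic penalty terms P(sum x - 1)^2. The Python sketch below shows this generic one-hot permutation penalty; it is a textbook construction assumed here for illustration, not the specific encoding from the paper (which must also encode join costs), and the variable layout is hypothetical.

```python
from itertools import combinations, product


def var_index(relation, position, n):
    """Index of the binary variable 'relation sits at position' (hypothetical layout)."""
    return relation * n + position


def permutation_penalty_qubo(n, penalty=1.0):
    """QUBO (dict) plus constant offset that is zero exactly for valid n x n permutations."""
    groups = [[var_index(r, j, n) for j in range(n)] for r in range(n)]    # each relation once
    groups += [[var_index(r, j, n) for r in range(n)] for j in range(n)]   # each position once
    Q, offset = {}, 0.0
    for group in groups:
        # For binary x: (sum(x) - 1)^2 = -sum(x) + 2 * sum_{i<k} x_i x_k + 1
        for i in group:
            Q[(i, i)] = Q.get((i, i), 0.0) - penalty
        for i, k in combinations(group, 2):
            Q[(i, k)] = Q.get((i, k), 0.0) + 2 * penalty
        offset += penalty
    return Q, offset


def penalty_energy(Q, offset, x):
    return offset + sum(w * x[i] * x[k] for (i, k), w in Q.items())


Q, offset = permutation_penalty_qubo(3)
valid = [x for x in product((0, 1), repeat=9) if penalty_energy(Q, offset, x) == 0]
```

Note that n relations already require n^2 binary variables in this layout, which hints at why encoding size matters so much on small QPUs. In a full encoding, cost terms are added with weights small enough relative to the penalty that no invalid assignment becomes energetically favourable.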

Current QPUs are classified as noisy intermediate-scale quantum (NISQ) computers, and are restricted by a variety of limitations that reduce their capabilities as compared to ideal future quantum computers, which prevents us from scaling up problem dimensions and reaching practical utility. To overcome these challenges, our formulation accounts for specific QPU properties and limitations, and allows us to trade between achievable solution quality and possible problem size.

In contrast to all prior work on quantum computing for query optimisation and database-related challenges, we go beyond currently available QPUs, and explicitly target the scalability limitations: Using insights gained from numerical simulations and our experimental analysis, we identify key criteria for co-designing QPUs to improve their usefulness for join ordering, and show how even relatively minor physical architectural improvements can result in substantial enhancements. Finally, we outline a path towards practical utility of custom-designed QPUs.

Felix Greiwe has joined the group as a doctoral student in the field of quantum computing, contributing to the TAQO-PAM project. Welcome, Felix!

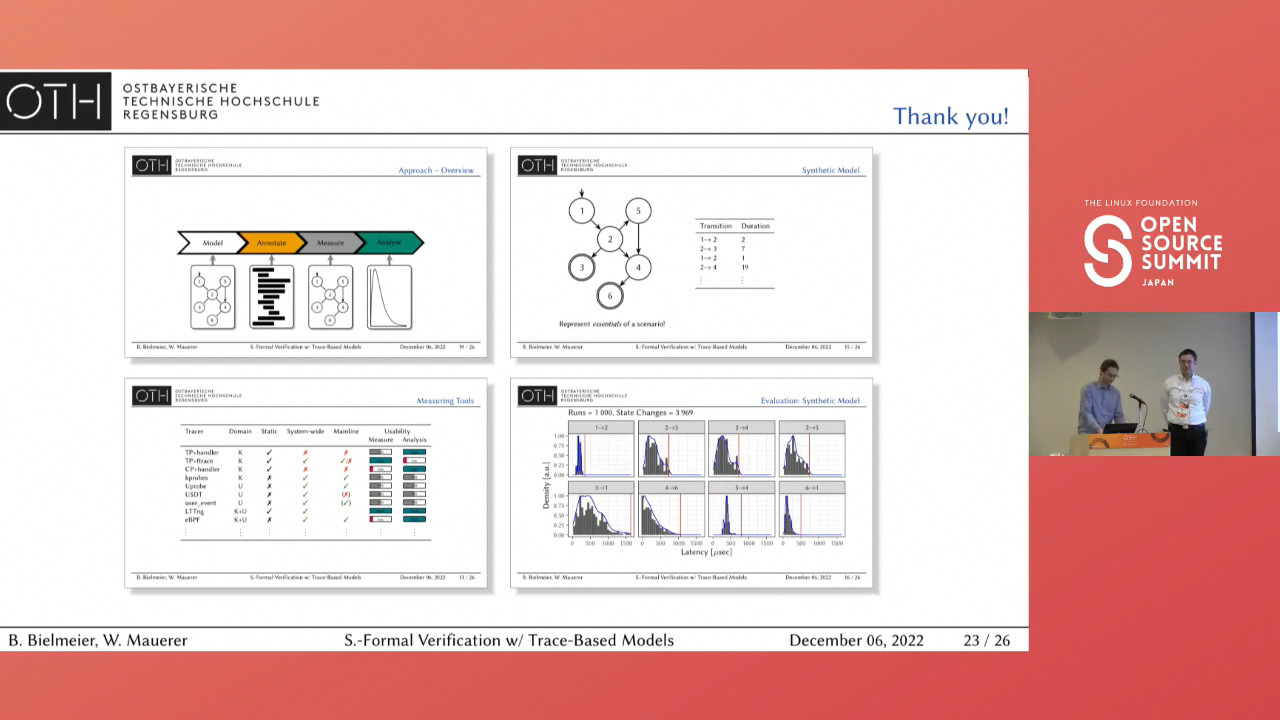

Contribution to the highly competitive Open Source Summit (with acceptance rates below 20%) in Yokohama, Japan by Benno Bielmeier and Wolfgang Mauerer.

Abstract: Software for safety-critical systems must meet strict functional and temporal requirements. Since it is impossible to exhaustively test the required qualities, formal verification techniques have been devised. However, these approaches are usually only applicable to small systems, and require software architecture and development to consider verification goals from the ground up. Safety-critical systems face an increasing demand for functionality, and need to handle the associated complexity. While the desired functionalities can be satisfied by embedded Linux, established verification techniques fail for code of such magnitude. We show a semi-formal, model-based approach to derive reliable statements about the run-time characteristics of embedded Linux. Using a-priori expert knowledge, we generate a finite automaton-based effective description of safety-relevant aspects. The real-world, system-dependent behaviour of the resulting automata, in particular timing statistics for state transitions, is empirically obtained via system instrumentation. We then show how to turn this into (statistical) guarantees on their behaviour. We show how this allows us to draw conclusions that can be used in certifying systems in terms of reliability, latencies, and other real-time properties.
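As a rough illustration of turning empirically measured transition timings into a statistical statement: the Python sketch below groups durations from a trace by automaton transition and derives a confidence-bounded exceedance probability for a deadline. It assumes i.i.d. samples and uses the Dvoretzky-Kiefer-Wolfowitz (DKW) inequality as one possible distribution-free bound; the trace format and function names are hypothetical and not taken from the presented approach.

```python
import math
from collections import defaultdict


def transition_durations(trace):
    """Group measured durations by automaton transition.

    trace: time-ordered (timestamp_us, state) events, e.g. from kernel tracing.
    The duration of (src, dst) is the time spent in src before entering dst.
    """
    durations = defaultdict(list)
    for (t0, src), (t1, dst) in zip(trace, trace[1:]):
        durations[(src, dst)].append(t1 - t0)
    return dict(durations)


def exceedance_bound(samples, deadline, alpha=0.05):
    """Upper bound on P(duration > deadline) that holds with confidence 1 - alpha.

    Uses the Dvoretzky-Kiefer-Wolfowitz inequality and therefore assumes
    independent, identically distributed samples.
    """
    n = len(samples)
    empirical = sum(d > deadline for d in samples) / n
    epsilon = math.sqrt(math.log(2 / alpha) / (2 * n))
    return min(1.0, empirical + epsilon)


trace = [(0, "idle"), (10, "irq"), (12, "idle"), (20, "irq"), (23, "idle")]
stats = transition_durations(trace)
```

The bound shrinks only with the square root of the number of samples, so the amount of instrumentation data directly limits how strong a statistical guarantee can be.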

QPU-System Co-Design for Quantum HPC Accelerators (with contributions by Hila Safi and Wolfgang Mauerer) was accepted by the 35th GI/ITG International Conference on the Architecture of Computing Systems (PDF).

Abstract: The use of quantum processing units (QPUs) promises speed-ups for solving computational problems, but the quantum devices currently available possess only a very limited number of qubits and suffer from considerable imperfections. One possibility to progress towards practical utility is to use a co-design approach: Problem formulation and algorithm, but also the physical QPU properties are tailored to the specific application. Since QPUs will likely be used as accelerators for classical computers, details of systemic integration into existing architectures are another lever to influence and improve the practical utility of QPUs.

In this work, we investigate the influence of different parameters on the runtime of quantum programs on tailored hybrid CPU-QPU-systems. We study the influence of communication times between CPU and QPU, how adapting QPU designs influences quantum and overall execution performance, and how these factors interact. Using a simple model that allows for estimating which design choices should be subjected to optimisation for a given task, we provide an intuition to the HPC community on potentials and limitations of co-design approaches. We also discuss physical limitations for implementing the proposed changes on real quantum hardware devices.
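The kind of trade-off studied here can be sketched with a deliberately simple, hypothetical cost model for an iterative hybrid workload: each iteration pays a round trip between CPU and QPU, the QPU time for all shots, and some classical processing. This Python sketch is an assumption for illustration, not the model from the paper, and all numbers are made up.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class HybridJob:
    """Hypothetical parameters of an iterative hybrid CPU-QPU workload (all times in seconds)."""
    iterations: int      # classical optimiser iterations
    shots: int           # circuit executions per iteration
    t_shot: float        # QPU time per shot
    t_comm: float        # one-way CPU <-> QPU communication latency
    t_classical: float   # classical processing per iteration

    def runtime(self) -> float:
        # Each iteration: send circuit/parameters, run all shots, return results, post-process.
        per_iteration = 2 * self.t_comm + self.shots * self.t_shot + self.t_classical
        return self.iterations * per_iteration


base = HybridJob(iterations=100, shots=1000, t_shot=1e-4, t_comm=0.05, t_classical=0.01)
faster_qpu = replace(base, t_shot=base.t_shot / 2)   # e.g., a co-designed, faster QPU
tighter_coupling = replace(base, t_comm=0.001)       # e.g., QPU integrated close to the CPU
```

With these made-up numbers, the baseline takes about 21 s, halving the per-shot QPU time yields about 16 s, and reducing the communication latency yields about 11.2 s. In this regime, systemic integration matters more than raw QPU speed; other parameter values can reverse that conclusion, which is why such estimates help decide which design choices are worth optimising.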

The paper is joint work with Siemens Technology and was performed within the BMBF-sponsored project TAQO-PAM. A reproduction package allows independent researchers to confirm our results.

Uncovering Instabilities in Variational-Quantum Deep Q-Networks (with contributions by Maja Franz, Lucas Wolf and Wolfgang Mauerer) was accepted by the Journal of the Franklin Institute (Impact Factor: 4.25)

Abstract: Deep Reinforcement Learning (RL) has considerably advanced over the past decade. At the same time, state-of-the-art RL algorithms require a large computational budget in terms of training time to converge. Recent work has started to approach this problem through the lens of quantum computing, which promises theoretical speed-ups for several traditionally hard tasks. In this work, we examine a class of hybrid quantum-classical RL algorithms that we collectively refer to as variational quantum deep Q-networks (VQ-DQN). We show that VQ-DQN approaches are subject to instabilities that cause the learned policy to diverge, study the extent to which this afflicts the reproducibility of established results based on classical simulation, and perform systematic experiments to identify potential explanations for the observed instabilities. Additionally, and in contrast to most existing work on quantum reinforcement learning, we execute RL algorithms on an actual quantum processing unit (an IBM Quantum Device) and investigate differences in behaviour between simulated and physical quantum systems that suffer from implementation deficiencies. Our experiments show that, contrary to claims in the literature, it cannot be conclusively decided if known quantum approaches, even if simulated without physical imperfections, can provide an advantage as compared to classical approaches. Finally, we provide a robust, universal and well-tested implementation of VQ-DQN as a reproducible testbed for future experiments.
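For readers less familiar with deep Q-networks: the training signal is a bootstrapped temporal-difference target, and in VQ-DQN approaches the Q-values are produced by a variational quantum circuit instead of a classical neural network. The Python sketch below shows only this classical target and loss step of standard DQN, with the (quantum) model abstracted away as plain lists of Q-values; the function names are hypothetical and this is not the implementation accompanying the paper.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Bootstrapped DQN targets y = r + gamma * max_a' Q_target(s', a') for a batch.

    next_q_values holds, per transition, the Q-value estimates for all actions in s'.
    In a VQ-DQN these would come from measuring a variational quantum circuit.
    """
    return [r if done else r + gamma * max(q_next)
            for r, q_next, done in zip(rewards, next_q_values, dones)]


def mse_loss(predicted, targets):
    """Mean squared TD error that the (quantum or classical) Q-model is trained to reduce."""
    return sum((p - y) ** 2 for p, y in zip(predicted, targets)) / len(targets)


targets = td_targets(rewards=[1.0, 0.0], next_q_values=[[0.5, 2.0], [1.0, 3.0]],
                     dones=[False, True], gamma=0.5)
loss = mse_loss(predicted=[2.0, 1.0], targets=targets)
```

Because the targets are computed from the model's own (or a target network's) estimates, estimation errors can feed back into training; this is general background on DQN-style methods, not a finding of the paper.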

The paper is joint work with Fraunhofer IIS and arose from the BMBF-sponsored project QLindA. Of course, the publication is accompanied by an extensive reproduction package that allows independent researchers to confirm our results.

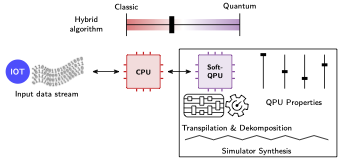

OTH Regensburg leads the consortium of the 3-million-euro lighthouse project Q-Stream

A proposal for a quantum lighthouse project in the Munich Quantum Valley, submitted under the lead of OTH Regensburg, has been selected for funding following the vote of an internationally staffed expert commission. The project, Q-Stream: Quantum-Accelerated Data Stream Analytics, will address the construction of hybrid quantum-classical special-purpose hardware specialised in the analysis of data streams.

Using a hardware-software co-design approach, the project aims to find applications for the current generation of quantum computers, which, owing to technical imperfections, still exhibit numerous shortcomings and disturbances that prevent their potentially enormous performance from unfolding fully.

Of the total funding of 2.98 million euros, around 750,000 EUR go to OTH Regensburg, which also contributes a position from the Hightech Agenda to the project. The Labor für Digitalisierung will focus its work on the conceptual design of special-purpose computers adapted to specific problems. Further quantum expertise is contributed by the Fraunhofer Institute for Integrated Circuits (IIS) (transpilation and decomposition of quantum circuits) and the Technische Hochschule Deggendorf (advance simulation of future quantum computers on classical HPC systems).

That OTH Regensburg made it onto the list of funded institutions alongside six Bavarian universities, the Max Planck and Fraunhofer Societies and the Bavarian Academy of Sciences and Humanities also appears to confirm the long-standing quantum activities of Prof. Dr. Wolfgang Mauerer in research and teaching.

Information from the Bavarian State Ministry of Science and the Arts, from which the laboratory gratefully receives the funding, can be found in a press release.

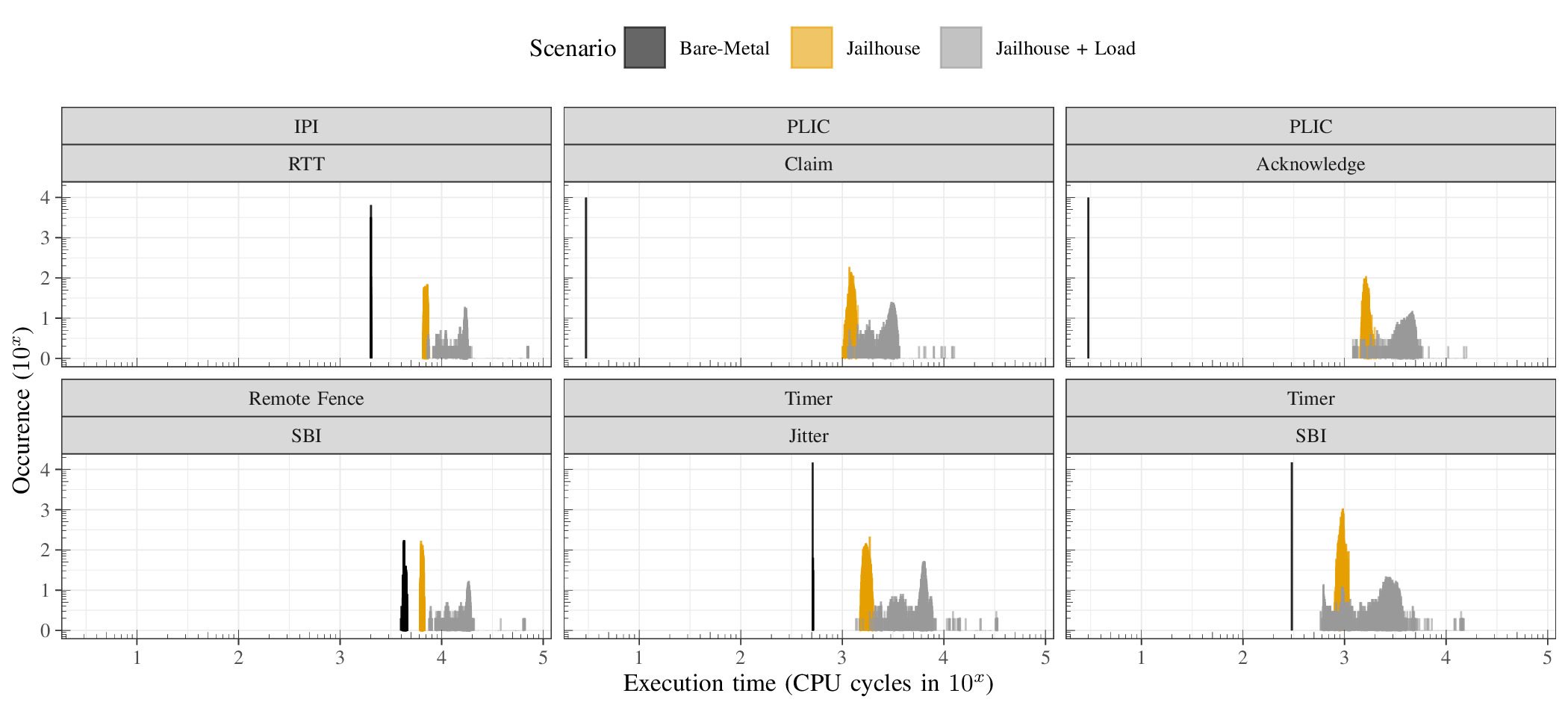

Static Hardware Partitioning on RISC-V - Shortcomings, Limitations, and Prospects was accepted by the IEEE IoT Forum Special Session: Virtualization for IoT Devices 2022.

Abstract: On embedded processors that are increasingly equipped with multiple CPU cores, static hardware partitioning is an established means of consolidating and isolating workloads onto single chips. This architectural pattern is suitable for mixed-criticality workloads that need to satisfy both real-time and safety requirements, given suitable hardware properties.

In this work, we focus on exploiting contemporary virtualisation mechanisms to achieve freedom from interference, that is, isolation between workloads. Possibilities to achieve temporal and spatial isolation, while maintaining real-time capabilities, include statically partitioning resources, avoiding the sharing of devices, and ascertaining zero interventions of superordinate control structures.

This eliminates overhead due to hardware partitioning, but implies certain hardware capabilities that are not yet fully implemented in contemporary standard systems. To address such hardware limitations, the customisable and configurable RISC-V instruction set architecture offers the possibility of swift, unrestricted modifications.

We present findings on the current RISC-V specification and its implementations that necessitate interventions by superordinate control structures. We identify numerous issues that work against our goal of zero interventions, and thus zero overhead, both at the design level and, especially, with regard to interrupt handling. Based on micro-benchmark measurements, we discuss the implications of our findings and argue how they can provide a basis for future extensions and improvements of the RISC-V architecture.

Ralf Ramsauer, Stefan Huber and Wolfgang Mauerer will discuss "Zero-Overhead Virtualisation: It's a Trap!" at the Embedded Linux Conference in Dublin.

Abstract: Embedded processors are increasingly equipped with powerful CPU cores. For mixed-criticality scenarios with multiple independent real-time appliances, this allows us to consolidate formerly distributed systems. This requires the absence of unintended interaction between different computing domains, which can be achieved by exploiting the virtualisation extensions of modern CPUs. Though it provides strong isolation guarantees, virtualisation comes with an overhead that may endanger global real-time properties of the system. The statically partitioning, Linux-based hypervisor Jailhouse addresses this challenge and strives for zero-overhead virtualisation, which maintains the real-time capabilities of the platform by design. However, limitations of current architectures counter our architectural design goal of eliminating virtualisation overheads. In this talk, we extract architecture-independent common requirements that contemporary platforms must meet to enable zero-trap virtualisation. We explore and compare the ARM, x86, and RISC-V architectures, and inspect their architectural limitations for embedded zero-overhead virtualisation. We present common pitfalls and barriers of these platforms: issues that have already been addressed, issues that are being fixed, and issues that still need to be addressed in the future.

The Bavarian Research Alliance (Bayerische Forschungsallianz) has approved guest researcher stays at the Tokyo University of Science. Prof. Dr. Wolfgang Mauerer will contribute his systems expertise to a project on the statistical analysis of real-time guarantees.

PhD student Manuel Schönberger took second place in the graduate track of this year's Student Research Competition at the CORE A* SIGMOD conference in Philadelphia!

The Student Research Competition is held annually at various ACM conferences, including SIGMOD. In the first round, students submit an extended abstract about their research. Based on the quality of their submissions, a select few students from universities around the globe, including Columbia University, the University of Illinois at Urbana-Champaign, Hasso-Plattner-Institut and TUM, were invited to present their research posters at the SIGMOD conference. Three students were selected for the third round, where they gave a more detailed presentation of their research. In the graduate category, Manuel took second place, competing against Alex Yao and Sughosh Kaushik, both from Columbia University, who took first and third place respectively.

In his research, Manuel analyses the applicability of quantum computing to database query processing. The research goes beyond merely mapping problems onto quantum hardware and also addresses the co-design of future quantum systems, so that they become tailor-made for database problems. Congrats, Manuel, on achieving this international recognition!

Quantum technologies are on the verge of breaking out of their ivory tower existence and entering the general marketplace.

At the premiere of World of Quantum, researchers and industry presented the latest findings on potential quantum applications and quantum hardware at the Laser World of Photonics in Munich. Research Master student Maja Franz and others visited the fair and explored the new platform for quantum technologies.

Exhibitors from industry and manufacturers of quantum computers gave a broad overview of current quantum technology; for instance, IBM Quantum let visitors see inside its quantum computer via augmented reality. Researchers in the field of quantum computing, such as those from Fraunhofer IKS, offered a lively exchange on hybrid quantum-classical algorithms.

Thanks to its sponsors, including the Bavarian State Ministry for Digital Affairs (Bayerisches Staatsministerium für Digitales), and other partners, World of Quantum was a success and an interesting experience.

State Minister announces extension of KI-Transfer Plus project headed by Wolfgang Mauerer

In the state-funded project "KI-Transfer Plus", regional AI centers such as the Regensburg Center for Artificial Intelligence (RCAI) support SMEs in getting started with artificial intelligence. At the closing event, Bavarian digital minister Judith Gerlach reviewed the results of the first project round. The host of the event, Horsch Maschinen GmbH from Schwandorf, showed how artificial intelligence enhances its own agricultural machinery: Horsch developed an algorithm that recognises plants and their center points, which is important both for autonomous driving in the field and for automated weed removal. The other five project participants from Upper Bavaria and the Upper Palatinate also presented innovative AI developments in a wide range of domains. Pleased with the results, Gerlach announced that, given the program's success, the project will be extended for another year to prepare the Bavarian economy for the key technologies of the future. See a summary video of the impressive work that engineers Nicole Höß and Matthias Melzer did together with our industry partners!

Master's student Mario Mintel presents his work on address space duplication with the Scoot mechanism he designed at FGDB'22 in Hamburg.

1-2-3 Reproducibility for Quantum Software Experiments was accepted at Q-SANER 2022.

Abstract: Various fields of science face a reproducibility crisis. For quantum software engineering as an emerging field, it is therefore imperative to focus on proper reproducibility engineering from the start. Yet reproduction packages are almost universally lacking. Actionable advice on how to build such packages is rare, which is particularly unfortunate in a field with many contributions from researchers whose backgrounds lie outside computer science. In this article, we argue how to rectify this deficiency by proposing a 1-2-3 approach to reproducibility engineering for quantum software experiments: Using a meta-generation mechanism, we generate DOI-safe, long-term functioning and dependency-free reproduction packages. They are designed to satisfy the requirements of professional and learned societies solely on the basis of project-specific research artefacts (source code, measurement and configuration data), and require little time investment from researchers. Our scheme ensures long-term traceability even when the quantum processor itself is no longer accessible. By drastically lowering the technical bar, we foster the proliferation of reproduction packages in quantum software experiments and ease the entry of non-CS researchers into the field.

QSAP@INFORMATIK 2022: "Workshop on quantum software and applications" was accepted at the annual conference of the German Gesellschaft für Informatik (GI) and will take place in September. It is co-organised by Stefanie Scherzinger (University of Passau) and Wolfgang Mauerer.

Beyond the Badge: Reproducibility Engineering as a Lifetime Skill was accepted at SEENG@ICSE 2022.

Abstract: Ascertaining reproducibility of scientific experiments is receiving increased attention across disciplines. We argue that the necessary skills are important beyond pure scientific utility, and that they should be taught as part of software engineering (SWE) education. They serve a dual purpose: Apart from earning the coveted badges assigned to reproducible research, reproducibility engineering is a lifetime skill for a professional industrial career in computer science. SWE curricula seem an ideal fit for conveying such capabilities, yet they require some extensions, especially given that even at flagship conferences like ICSE, only slightly more than one-third of the technical papers (at the 2021 edition) receive recognition for artefact reusability. Setting up engineering environments that allow for reproducing artefacts and results over decades (a standard requirement in many traditional engineering disciplines), writing semi-literate commit messages that document crucial steps of a decision-making process and are tightly coupled with code, and sustainably taming dynamic, quickly changing software dependencies, to name a few, all contribute to solving the scientific reproducibility crisis and enable software engineers to build sustainable, long-term maintainable, software-intensive industrial systems. We propose to teach these skills at the undergraduate level, on par with traditional SWE topics.

The Bavarian Research Alliance (Bayerische Forschungsallianz) has approved guest researcher stays at the FORTH institute in Crete and the University of Ioannina. Prof. Dr. Wolfgang Mauerer will contribute his expertise in software engineering and reproducibility to a project on schema evolution in databases.

Peel | Pile? Cross-Framework Portability of Quantum Software was accepted at QSA@ICSA 2022.

Abstract: In recent years, various vendors have made quantum software frameworks available. Yet with vendor-specific frameworks, code portability seems at risk, especially in a field where hardware and software libraries have not yet reached a consolidated state, and even foundational aspects of the technologies are still in flux. Accordingly, the development of vendor-independent quantum programming languages and frameworks is often suggested. This follows the established architectural pattern of introducing additional levels of abstraction into software stacks, thereby piling on layers of abstraction. Yet software architecture also provides seemingly less abstract alternatives, namely focusing on hardware-specific formulations of problems that peel off unnecessary layers. In this article, we quantitatively and experimentally explore these strategic alternatives, and compare popular quantum frameworks from the software implementation perspective. We find that for several specific, yet generalisable problems, the mathematical formulation of the problem to be solved is not only sufficiently abstract to serve as a precise description, but also concrete enough to allow for deriving framework-specific implementations with little effort. Additionally, based on analysing dozens of existing quantum codes, we argue that porting between frameworks is actually low-effort, since the quantum- and framework-specific portions are very manageable in size, commonly on the order of mere hundreds of lines of code. Given the current state of the art in quantum programming practice, this leads us to argue in favour of peeling off unnecessary abstraction levels.

Project volume: EUR 8.2 million; consortium lead: Wolfgang Mauerer.

The increasing mass production of individualised goods, and the complex logistics it requires within modern factories, call for solving large optimisation problems in real time. Classical computers cannot solve such problems sufficiently well. This project therefore aims to design hybrid quantum-classical algorithms that enable the soon-to-be-available quantum computers with a few tens of qubits to contribute to solving these problems. This is achieved by integrating adapted quantum processing units (QPUs) into existing scenarios, and by extending existing methods of factory automation and production planning.

By focusing on local data processing directly on site instead of using external cloud services, the project avoids the need to share fundamental know-how and production runtime data with third parties. In addition, time-critical computations incur no delays from data transfers. Assuming that suitable custom-made QPUs will become available in the medium term, the project addresses the lack of quantum algorithms for optimising manufacturing tasks, the missing integration of quantum computing into industrial processes, and the accessibility of the technology for users, to whom the results are to be made available without requiring in-depth knowledge of quantum mechanics and quantum information science.

By systematically mapping real-world problems onto methods that combine the advantages of quantum algorithms with those of classical algorithms, the project aims to successfully solve industrially exploitable use cases. In the longer term, the algorithms developed in this project can also be executed and extended on more powerful quantum computers, enabling even more complex optimisations of production processes that further increase companies' productivity and competitiveness (text source: BMBF).

Abstract: Computer-based automation in industrial appliances has led to a growing number of logically dependent, but physically separated embedded control units per appliance. Many of those components are safety-critical systems and require adherence to safety standards, which conflicts with the relentless demand for features in those appliances. Features lead to a growing number of control units per appliance and to an increasing complexity of the overall software stack, which is unfavourable for safety certification. Modern CPUs provide the means to revise traditional separation-of-concerns design primitives through the consolidation of systems, which raises new engineering challenges that concern the entire software and system stack.

Multi-core CPUs favour the economic consolidation of formerly separated systems onto one efficient hardware unit. Nonetheless, the system architecture must provide means to guarantee freedom from interference between domains of different criticality. System consolidation demands architectural and engineering strategies to fulfil requirements (e.g., real-time or certifiability criteria) in safety-critical environments.